<center>07 - WW3 Experiment for a Given Region (2022 Winter Break Plan)</center>

Document hosting

—————————————CCMP

References

Official site: https://www.remss.com/measurements/ccmp/

Accessible as of 2022-01-17.

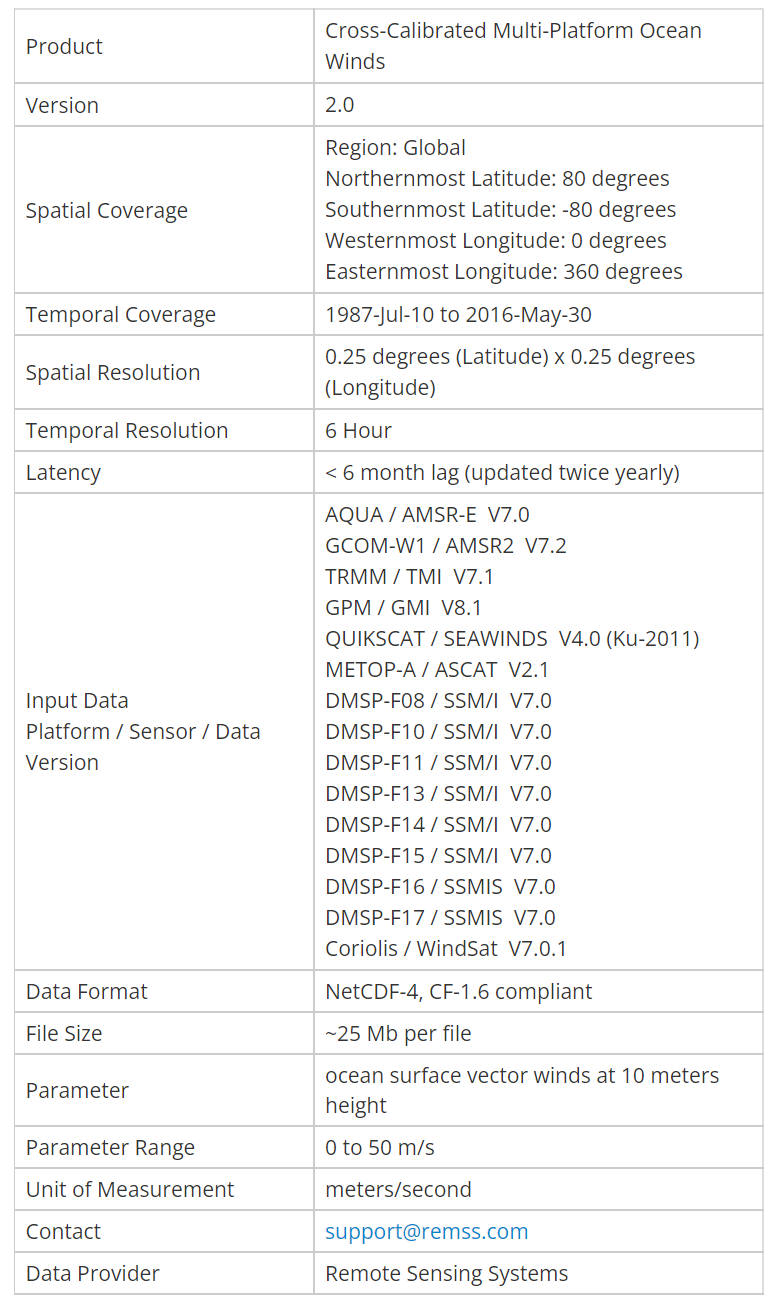

CCMP Wind Vector Analysis Product

Accessible as of 2022-02-04.

From the website link sent by instructor Wjc.

readme_ccmp.pdf comes from the CCMP Wind Vector Analysis Product page.

CCMP Wind Vector Analysis Product (2022-01-17)

Version 2 CCMP Wind Product

The Cross-Calibrated Multi-Platform (CCMP) gridded surface vector winds are produced using satellite, moored buoy, and model wind data, and as such, are considered to be a Level-3 ocean vector wind analysis product. We have updated the CCMP product, using improved and additional input data.

This web site documents the new CCMP V2.0 data set now available from Remote Sensing Systems (RSS).

The V2 CCMP processing now combines Version-7 RSS radiometer wind speeds, QuikSCAT and ASCAT scatterometer wind vectors, moored buoy wind data, and ERA-Interim model wind fields using a Variational Analysis Method (VAM) to produce four maps daily of 0.25 degree gridded vector winds.

Note: this paragraph describes the data set, the input data it uses, and the method used to combine them.

The original CCMP product (V1.1) was originally produced by Bob Atlas and his team with funding from the NASA REASoN and MEaSUREs programs. The original MEaSUREs product is still available from the NASA Physical Oceanography DAAC (podaac.jpl.nasa.gov).

RSS has transitioned the CCMP processing code to run using our most up-to-date satellite data observations. All methodology remains the same as that used in the original CCMP product and most of the CCMP processing code is unchanged, with only minor alterations to compensate for the different operating systems and compilers.

Vocabulary: transition = to switch over or convert

Vocabulary: compensate = to make up for

Introduction

Gap-free ocean surface wind data of high quality and high temporal and spatial resolution are useful for a variety of purposes and are necessary for studying large scale air-sea interactions affecting the atmosphere and the ocean. Ocean vector winds are dynamic, continually evolving over short time scales. This characteristic makes the production of global, gridded, gap-free wind fields a challenge, especially at temporal scales of less than one day and spatial scales smaller than typical wind features.

Accurate research requires ocean vector wind data for a long enough time period to resolve wind-induced patterns such as the El Niño-Southern Oscillation (ENSO) and the Madden-Julian Oscillation (MJO). Remote Sensing Systems has invested many years of research into validating and cross-calibrating passive and active microwave wind retrievals from satellites.

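Note: the data record must be long enough to capture phenomena such as ENSO and the MJO.

Vocabulary: passive = not emitting its own signal (a passive sensor only receives)

Vocabulary: retrieval = in remote sensing, the derivation of a geophysical quantity (here, wind) from the satellite measurements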

The Variational Analysis Method (VAM) of data assimilation was utilized by Atlas et al. [1996, 2011] to find the most dynamically suitable way to combine satellite observations and in situ wind measurements into gap-free wind fields. The VAM generates a gridded surface wind analysis which minimizes an objective function measuring the misfit of the analysis to the background, the data and certain a priori constraints.

Note: this paragraph introduces the VAM and what it is used for.

The CCMP wind analyses are at temporal and spatial resolutions suitable for scientific study. The CCMP Version 2.0 (V2) data product described here is a continuation of the highly-used original CCMP product (V1.1 available from NASA JPL PO.DAAC) and builds on the decades of careful VAM development. Two peer-reviewed publications (Atlas et al. 1996, Atlas et al., 2011) describe the original CCMP product and (Hoffman et al, 2003) describes the VAM. This documentation describes the new CCMP V2.0 product available from Remote Sensing Systems.

The original V1.1 CCMP product was processed incrementally, over a number of years, in small batches using the satellite data available at the time of processing, and therefore, <font color='red'>assimilated inconsistently processed satellite winds</font>. That is, earlier V4 through V6 RSS winds were used for early production years with V7 winds for the later few years of production. Funding for the original CCMP (V1.1) ended in 2012 with no CCMP winds produced since December 2011. There has been considerable demand for the continuation of the CCMP product, so we have transitioned the code to RSS with the purpose of meeting several distinct goals:

- Extend the product to the present time and continue processing into the future.

- Reprocess using a consistent version of satellite data.

- Add newly available sensors.

- Utilize a higher resolution and consistently produced background model wind field throughout the analysis product.

Vocabulary: incrementally = in successive small steps

We provide the Level-3.0 (L3.0) and Level-3.5 (L3.5) CCMP V2.0 products in netCDF4 format.

- The L3.0 CCMP V2.0 product contains four daily maps (00, 06, 12, and 18Z) of U and V vector wind components on a 0.25 degree global grid. The U and V components are relative to true north. The L3.0 data also includes maps of the number of observations (both satellite and in situ) that were analyzed at each location.

- The L3.5 CCMP V2.0 contains monthly winds averaged over the calendar month. Vector averages are computed for U and V. The average wind W is computed using a scalar average.

- Updates to the CCMP V2.0 product will be made roughly twice a year, to complete processing through December and June.

- The most recent update occurred in March 2018, and CCMP is now updated through December 30, 2017.

Product Development

The CCMP data set combines cross-calibrated satellite microwave winds and instrument observations using a Variational Analysis Method (VAM) to produce high-resolution (0.25 degree) gridded analyses.

Satellite wind retrievals derived by Remote Sensing Systems from a number of satellite-borne passive and active microwave instruments are used. RSS intercalibrates radiometers at the brightness temperature level to within 0.2 degree Celsius, applying a refined sea-surface emissivity model and radiative transfer function to derive surface winds. The resulting wind retrievals are highly consistent between microwave radiometer instrument platforms, including SSM/I, SSMIS, AMSR, TMI, WindSat, and GMI. RSS has also developed a geophysical model function for deriving wind speeds and directions from microwave scatterometers, including QuikSCAT and ASCAT.

Both radiometer and scatterometer data are validated against ocean moored buoys, which confirm the measurements are in agreement (to within 0.8 m/s) despite the difference in wind measurement and retrieval methodologies.

The VAM combines RSS instrument data with moored buoy measurements and a starting estimate (first-guess) of the wind field.

The European Center for Medium-Range Weather Forecasts (ECMWF) ERA-Interim Reanalysis winds are used in the CCMP V2.0 processing as the first-guess wind field. This 0.25 deg model wind field is consistently processed, as opposed to that of the ECMWF operational model for which the model changes over time.

All wind observations (satellite and buoy) and model analysis fields are referenced to a height of 10 meters.

CCMP VERSION-2 UPDATES

RSS cooperated with Dr. Ross Hoffman and Mark Leidner to transition the maintenance, update, and continued processing of CCMP. Once the transition was confirmed to be accurately functioning, RSS conducted a full reprocessing of the CCMP data set bringing it out of first-look status and delivering a consistently-reprocessed V2.0 data product for public use in scientific research. The version changes associated with the RSS V2.0 CCMP release include:

- Use of uniform inputs and satellite retrievals, with all satellite winds produced using RSS Version-7 (or higher) Ocean Radiative Transfer Model (ORTM) and a consistent processing methodology.

- Extension of the CCMP data set to July 2015 with plans to provide bi-annual extensions and updates to the dataset.

- Addition of winds from new instruments: ASCAT Metop-A, AMSR2, and GMI.

- Upgrade of the first-guess background wind field. Now using the 0.25-deg, 6-hourly ERA-Interim Reanalysis winds.

- Use of improved moored buoy data with better quality control, including winds from NDBC, TAO, TRITON, RAMA, PIRATA, and Canadian buoys.

- Upgrade of the data file format to netCDF4 with CF-1.6 metadata. Each daily file contains four 6-hourly wind analyses and self-describing metadata.

A time series of all input data used in the VAM is shown below. Passive radiometers are plotted in red and active scatterometers are plotted in green. The version of data used for each instrument is provided. The moored buoy winds and ERA-Interim Reanalysis winds are available for the entire data range. Quality controlled buoy data have been obtained from the Pacific Marine Environmental Lab (PMEL), from the National Data Buoy Center (NDBC), and from the Fisheries and Ocean Canada Oceanography and Scientific Data branch (OSD).

Full reprocessing of the entire data set takes approximately 1-2 months. Twice per year, in January and July, we will update the data product to include the past 6 months. For example, in January 2017, we will release June 2016 to December 2016. In transitioning the code to run at RSS, special care was taken to make no changes to the overall approach or the methodology of the original CCMP product. The code was changed, only where necessary, to account for changes in system architecture and compilers. Source code that was needed for the addition of new satellite winds (AMSR2 or GMI) was adapted from already existing code. This type of careful transition ensures that the same high-quality CCMP product is still produced, but with updated satellite, buoy and model wind data as input.

Data Product Format

The CCMP V2.0 Level-3.0 (L3.0) winds are available as netCDF-4 data files. Each L3.0 daily data file contains 3 arrays of size 1440 (longitude) by 628 (latitude for range -78.375 to 78.375) by 4 (time of 0Z, 06Z, 12Z, 18Z). Two of the arrays are the U and V wind components in meters/second (m/s). Standard U and V coordinates apply, meaning the positive U is to the right and positive V is above the axis. U and V are relative to true north. CCMP winds are expressed using the oceanographic convention, meaning a wind blowing toward the Northeast has a positive U component and a positive V component. The third array in the file is the number of observations (nobs) used to derive the wind components. A nobs value of 0.0 means that the wind vector for that grid cell is very close to the wind vector from the background field because no satellite or moored buoy wind data were available to directly influence the analyzed vector wind.
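As a note on the convention described above, the following minimal Python sketch converts one (U, V) pair into a wind speed and a direction. The function names are my own and are not part of any RSS routine. It assumes U is the eastward and V the northward component in m/s, and it reports the direction the wind blows toward (oceanographic convention), measured clockwise from true north.

```python
import math

def wind_speed_and_direction(u, v):
    """Return (speed in m/s, direction in degrees clockwise from north
    that the wind blows TOWARD) for eastward u and northward v."""
    speed = math.hypot(u, v)
    # atan2(u, v) measures the angle from north (+v) toward east (+u).
    direction_to = math.degrees(math.atan2(u, v)) % 360.0
    return speed, direction_to

def meteorological_direction(direction_to):
    """Convert a 'blowing toward' direction into the 'blowing from' direction."""
    return (direction_to + 180.0) % 360.0

# A wind blowing toward the northeast has positive u and positive v.
speed, toward = wind_speed_and_direction(5.0, 5.0)
print(round(speed, 3), toward, meteorological_direction(toward))
```

Wave models and weather reports often use the "blowing from" direction, so it is worth checking which convention a downstream tool expects before passing directions on.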

The CCMP V2.0 Level-3.5 (L3.5) winds are available as netCDF-4 data files. Each L3.5 monthly data file contains 3 arrays of size 1440 (longitude) by 628 (latitude for range -78.375 to 78.375) by 1 (time centered on the middle of the calendar month). The first two arrays are the vector-averaged U and V wind components in meters/second (m/s). The third array contains the scalar-averaged wind speed W for each location. Note that in regions with wind directions that vary substantially over time, W can be much higher than the magnitude of the vector-average components U and V.
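The difference between the vector average and the scalar average can be illustrated with a small Python sketch. The sample values are invented for illustration and the function name is hypothetical; this is not the RSS averaging code.

```python
import math

def monthly_averages(samples):
    """samples: list of (u, v) pairs in m/s.
    Returns (vector-mean u, vector-mean v, scalar-mean speed W)."""
    n = len(samples)
    u_mean = sum(u for u, _ in samples) / n
    v_mean = sum(v for _, v in samples) / n
    # The scalar average takes the speed of each sample first, then averages.
    w_mean = sum(math.hypot(u, v) for u, v in samples) / n
    return u_mean, v_mean, w_mean

# Invented samples: the wind keeps reversing direction, so the components cancel.
samples = [(8.0, 0.0), (-8.0, 0.0), (0.0, 6.0), (0.0, -6.0)]
u_mean, v_mean, w_mean = monthly_averages(samples)
print(u_mean, v_mean, w_mean)
print(math.hypot(u_mean, v_mean) <= w_mean)
```

In this made-up case the vector-averaged components are both zero while W is 7 m/s, which is the situation the paragraph above warns about for regions with strongly varying wind direction.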

A fill value of -9999.0 is used for any grid cell without any data. This should rarely happen. Longitude is given in degrees East from 0.125 to 359.875 and latitude is given in degrees North with negative values representing southern locations. While this is referred to as a global wind product, the extent of the data is -79 degrees to 79 degrees latitude. The time in the file is given as hours since midnight on Jan 1, 1987.
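The time and grid description above can be turned into two small helpers. This is a sketch under stated assumptions: the helper names are my own, the grid-cell edges (multiples of 0.25 degrees in longitude, starting at -78.5 degrees in latitude) are inferred from the cell centers quoted above, and the indices are zero-based (add 1 for MATLAB).

```python
from datetime import datetime, timedelta

EPOCH = datetime(1987, 1, 1)   # "hours since midnight on Jan 1, 1987"
FILL_VALUE = -9999.0           # fill value quoted in the product description

def hours_to_datetime(hours):
    """Convert a CCMP time value (hours since the epoch) to a datetime."""
    return EPOCH + timedelta(hours=hours)

def grid_index(lon_east, lat_north):
    """Return zero-based (i_lon, i_lat) of the 0.25-degree cell containing
    the point. Cell centers run 0.125..359.875 E and -78.375..78.375 N."""
    if not -78.5 <= lat_north < 78.5:
        raise ValueError("latitude outside the CCMP grid")
    i_lon = int((lon_east % 360.0) // 0.25)   # also maps negative longitudes
    i_lat = int((lat_north + 78.5) // 0.25)
    return i_lon, i_lat

def is_missing(value):
    """True when a grid value equals the fill value."""
    return value == FILL_VALUE

print(hours_to_datetime(216240))       # first time step of a 2011-09-02 file
print(grid_index(120.3, 35.1))
```

The value 216240 is the valid_min of the time variable in the 20110902 file inspected later in these notes, so it gives a quick check that the epoch is handled correctly.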

The netCDF file contains CF 1.6-compliant self-describing metadata. The JPL metadata compliance checker was used to assess compliance. The RSS CCMP V2.0 data set is distributed as L3.0 daily and L3.5 monthly files. The V1.1 CCMP data set available from PO.DAAC also contained L2.5 (vectors on satellite data) and L3.5 pentad data products. However, RSS has chosen to not distribute the L2.5 or L3.5 pentad data products at this time.

MISSING DATA

There are gaps within these data. There are 11 days scattered between May and December of 1988 and 14 days in January through July 1989 that do not appear on our FTP server.

During this time period, F08 is the only operational satellite input into the dataset. Days that are missing are because there was either very little or no data collected by the satellite on that day.

In future reprocessing, we may include these files for completeness, but the CCMP wind field would essentially be just the ERA-Int background except for minor differences near buoys.

Read Routines / Data Access

The CCMP netCDF4 files can be explored using tools such as Panoply or ncBrowse. Matlab, IDL, and Python have built-in routines for reading netCDF files. We have provided a sample read routine in Python. The routine will plot and display the data for an example file. CCMP data files can be obtained from RSS by ftp and http.

remss: Remote Sensing Systems

File names have the structure CCMP_Wind_Analysis_YYYYMMDD_V02.0_L3.0_RSS.nc where YYYY is the 4-digit year, MM the month and DD the day-of-month. The files are stored in v02.0/Yyyyy/Mmm/ directory structure.
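The naming rule above can be sketched in Python as follows. The function names are hypothetical; the base URL is the v02.0 download address recorded in the download script later in these notes, and the sketch only builds strings without contacting the server.

```python
from datetime import date

# Download root noted in these notes for CCMP v02.0.
BASE_URL = "https://data.remss.com/ccmp/v02.0"

def ccmp_l3_filename(day):
    """File name CCMP_Wind_Analysis_YYYYMMDD_V02.0_L3.0_RSS.nc for a date."""
    return f"CCMP_Wind_Analysis_{day:%Y%m%d}_V02.0_L3.0_RSS.nc"

def ccmp_l3_url(day):
    """Full URL following the v02.0/Yyyyy/Mmm/ directory structure."""
    return f"{BASE_URL}/Y{day:%Y}/M{day:%m}/{ccmp_l3_filename(day)}"

print(ccmp_l3_url(date(1990, 2, 1)))
```

Formatting the date object directly keeps the zero padding of month and day consistent between the directory name and the file name.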

Browse Images / Graphic Maps

The following movies loop through the full 28 years of data.

Uploaded to my personal Bilibili channel:

CCMP Wind Speed Anomaly Animation

Uploaded to my personal Bilibili channel:

Product Details

Known Issues / Data Caveats

The following information should be taken into consideration when using this data product:

- Users should not consider these winds suitable for studying global trends. While the winds are derived from consistently processed satellite data suitable for climate study, the act of assimilating the model data into an analysis product potentially introduces spurious trends that may exist in the background wind field. These CCMP V2.0 winds may, however, be suitable for studying regional trends and patterns.

- Caution should be used when studying high wind regions. We have noted differences between satellite data and the CCMP winds at high wind speeds (>25 m/s), where the background model wind is known to underestimate wind events. Since the VAM method attempts to find a consistent wind field solution somewhere between the satellite-observed high wind events and the lower model winds for those same events, CCMP, as a wind analysis product, will inevitably be lower than the satellite data indicates. Note, wind events of this magnitude tend to be infrequent and geographically limited.

- The V2.0 winds are different from the V1.1 winds. Users should not compare or mix the two products. Differences between the versions do exist and are related to the changes in the satellite data and background wind field. For example, the new Ku-2011 GMF QuikSCAT winds are much improved at high wind speeds resulting in lower CCMP V2.0 winds at speeds greater than 25 m/s when compared to the CCMP V1.1 winds.

Related Data

The original CCMP V1.1 FLK data are available at the NASA Physical Oceanography DAAC (podaac.jpl.nasa.gov)

References

Atlas, R., R. N. Hoffman, J. Ardizzone, S. M. Leidner, J. C. Jusem, D. K. Smith, D. Gombos, 2011: A cross-calibrated, multiplatform ocean surface wind velocity product for meteorological and oceanographic applications. Bull. Amer. Meteor. Soc., 92, 157-174. doi: 10.1175/2010BAMS2946.1

Atlas, R., R.N. Hoffman, S.C. Bloom, J.C. Jusem, and J. Ardizzone, 1996: A multiyear global surface wind velocity dataset using SSM/I wind observations. Bull. Amer. Meteor. Soc., 77, 5, 869-882.

Hoffman, R. N., M. Leidner, J. M. Henderson, R. Atlas, J. V. Ardizzone, and S. C. Bloom, 2003: A two-dimensional variational analysis method for NSCAT ambiguity removal: methodology, sensitivity, and tuning. Journal of Atmospheric & Oceanic Technology, 20, 585-605.

The original V1.1 Cross-Calibrated Multi-Platform Ocean Surface Wind Vector Analyses User Guide (.pdf) available from JPL PO.DAAC.

How to Cite These Data

Continued production of this data set requires support from NASA. We need you to be sure to cite these data when used in your publications so that we can demonstrate the value of this data set to the scientific community. Please include the following statement in the acknowledgement section of your paper:

“CCMP Version-2.0 vector wind analyses are produced by Remote Sensing Systems. Data are available at www.remss.com.”

DATA SET CITATION

Wentz, F.J., J. Scott, R. Hoffman, M. Leidner, R. Atlas, J. Ardizzone, 2015: Remote Sensing Systems Cross-Calibrated Multi-Platform (CCMP) 6-hourly ocean vector wind analysis product on 0.25 deg grid, Version 2.0, [indicate date subset, if used]. Remote Sensing Systems, Santa Rosa, CA. Available online at www.remss.com/measurements/ccmp. [Accessed dd mmm yyyy]. *Insert the appropriate information in the brackets.

JOURNAL REFERENCE

Atlas, R., R. N. Hoffman, J. Ardizzone, S. M. Leidner, J. C. Jusem, D. K. Smith, D. Gombos, 2011: A cross-calibrated, multiplatform ocean surface wind velocity product for meteorological and oceanographic applications. Bull. Amer. Meteor. Soc., 92, 157-174. doi: 10.1175/2010BAMS2946.1

Mears, C. A., Scott, J., Wentz, F. J., Ricciardulli, L., Leidner, S. M., Hoffman, R., & Atlas, R. ( 2019). A Near Real Time Version of the Cross Calibrated Multiplatform (CCMP) Ocean Surface Wind Velocity Data Set. Journal of Geophysical Research: Oceans, 124, 6997– 7010. https://doi.org/10.1029/2019JC015367

References

https://blog.csdn.net/qq_38882446/article/details/111371015

MATLAB web function

http://blog.sina.com.cn/s/blog_aed5bd1d0102wusz.html

MATLAB urlwrite function

https://www.ilovematlab.cn/thread-253224-1-1.html?s_tid=RelatedContent

MATLAB: checking whether a selected date exists

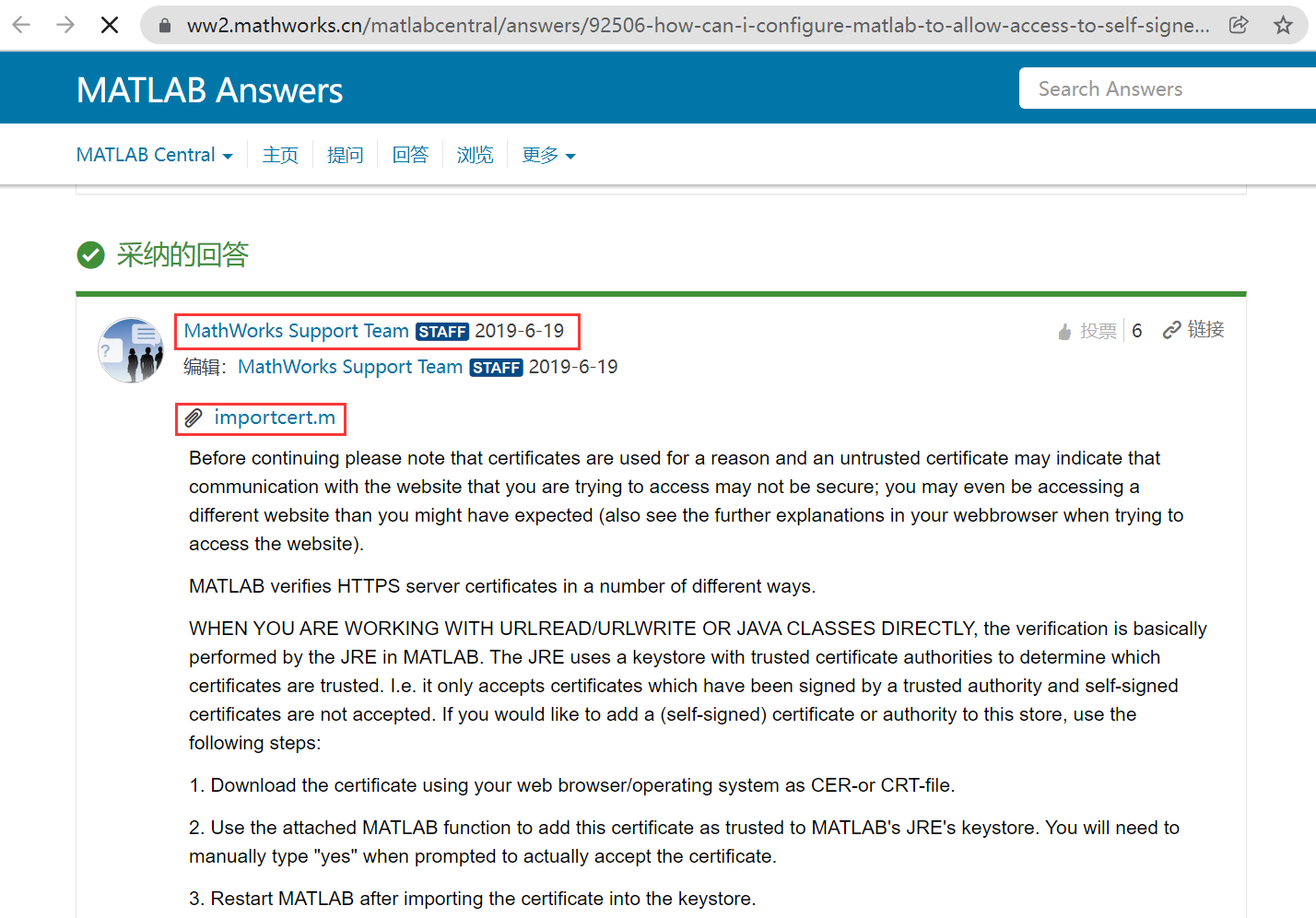

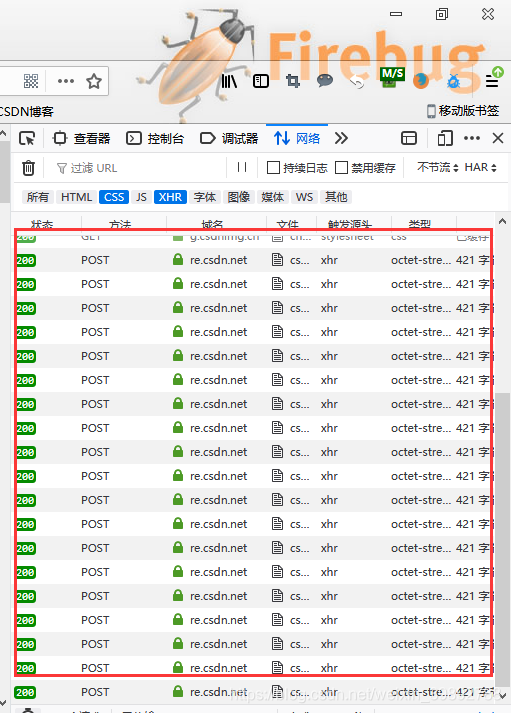

Downloading CCMP data (MATLAB, urlwrite function)

Download script download_ccmp.m:

%% Notes

% CCMP v02.0 data download URL: https://data.remss.com/ccmp/v02.0/

%% L3.0 data download

filepath='D:\ccmp\data_L3\'; mkdir(filepath); % create the folder where downloaded data are saved (note: the path must end with \ )

% URL pattern: three placeholders are needed (year, month, day)

% https://data.remss.com/ccmp/v02.0/Y1990/M02/CCMP_Wind_Analysis_19900201_V02.0_L3.0_RSS.nc

% 1987:1:2019

% 01:1:12

% 01:1:31

% full range of placeholder values

% year = num2str([1987:1:2019]'); year(2,:); size(year); % placeholder: year

% month = num2str([1:1:12]','%02d'); month(2,:); % placeholder: month

% day = num2str([1:1:31]','%02d'); day(2,:); % placeholder: day

% placeholder values used in this run

year = num2str([2011]'); % placeholder: year

month = num2str([9]','%02d'); % placeholder: month

day = num2str([1:10]','%02d'); % placeholder: day

for i=1:1:size(year,1)

for j=1:1:size(month,1)

for k=1:1:size(day,1)

% check whether the date exists

ts = [year(i,:),'-',month(j,:),'-',day(k,:)];

try

tf = isdatetime(datetime(ts)); % without try, this line throws an error for an invalid date

catch

tf = 0;

end

if(tf==1) % the date exists

%https://data.remss.com/ccmp/v02.0/Y1990/M02/CCMP_Wind_Analysis_19900201_V02.0_L3.0_RSS.nc

fullURL=['https://data.remss.com/ccmp/v02.0/Y',year(i,:), ...

'/M',month(j,:), ...

'/CCMP_Wind_Analysis_',year(i,:),month(j,:),day(k,:),'_V02.0_L3.0_RSS.nc']; % URL to download

filename=[filepath,'CCMP_Wind_Analysis_',year(i,:),month(j,:),day(k,:),'_V02.0_L3.0_RSS.nc']; % local file name to save to

tic % start timing the download

[f,status]=urlwrite(fullURL,filename); % download command

if status==1 % download succeeded

t=toc;

lst=dir(filename); % query the file size

xi=lst.bytes;

disp(['CCMP_Wind_Analysis_',year(i,:),month(j,:),day(k,:),'_V02.0_L3.0_RSS.nc',...

' downloaded successfully, file size ',num2str(xi/1024/1024),' MB, elapsed ',num2str(t/60),' minutes.']);

else

disp(['CCMP_Wind_Analysis_',year(i,:),month(j,:),day(k,:),'_V02.0_L3.0_RSS.nc',' download failed.']);

end

else

disp([ts,' is not a valid date.']);

end

end

end

end

%% L3.5数据下载

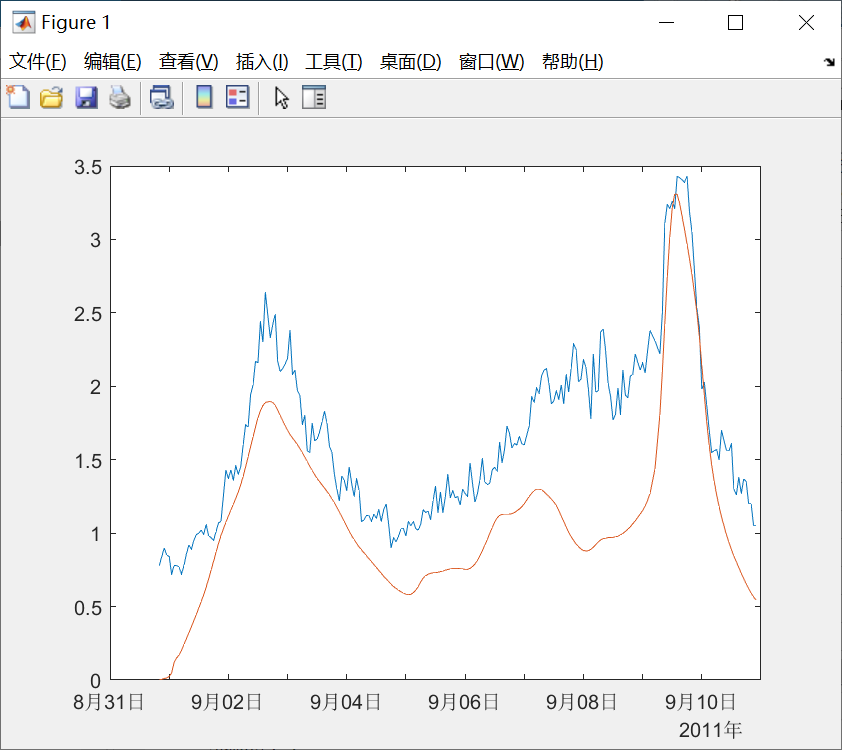

% ...运行程序后,会下载20110901-20110910的数据,可以根据自己需求改变时间段。
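The date-existence check in the script above (a try/catch around datetime) has a direct counterpart in Python. The sketch below is only an illustration of the same idea with a hypothetical function name: it enumerates every year/month/day combination and silently skips combinations that are not real calendar dates.

```python
from datetime import date

def valid_dates(years, months, days):
    """Return all real calendar dates among the year/month/day combinations,
    in the same nested-loop order as the MATLAB download script."""
    result = []
    for y in years:
        for m in months:
            for d in days:
                try:
                    result.append(date(y, m, d))
                except ValueError:
                    # e.g. 30 February: date() rejects it, so skip it.
                    continue
    return result

# Same selection as the script above: 1 to 10 September 2011.
september = valid_dates([2011], [9], range(1, 11))
print(len(september), september[0], september[-1])

# February 2011 has no day 29, 30 or 31.
print(valid_dates([2011], [2], range(27, 32)))
```

Each returned date can then be used to build the file name and URL for that day.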

References

https://blog.csdn.net/schumacher2016/article/details/82852700

Merging multiple netCDF files (MATLAB)

https://blog.csdn.net/muse_squirrel/article/details/76014446

MATLAB: inspecting and reading nc data

https://ww2.mathworks.cn/help/matlab/ref/netcdf.redef.html

netcdf.reDef

Merging CCMP nc data (MATLAB)

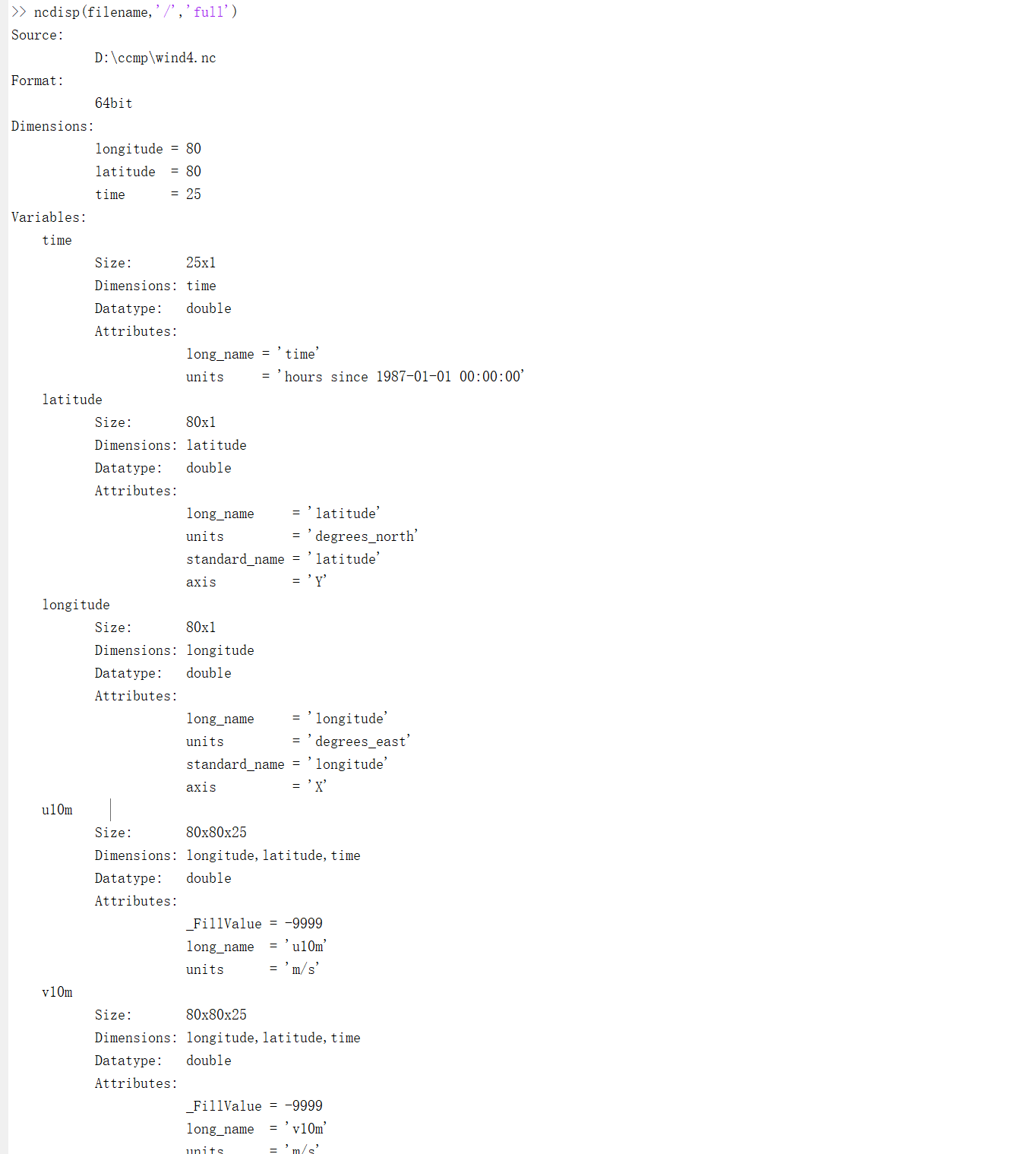

Inspecting one of the CCMP nc files gives the following MATLAB output:

>> ncdisp(strcat(datadir,filelist(i).name),'/','full')

Source:

D:\ccmp\data_L3\CCMP_Wind_Analysis_20110902_V02.0_L3.0_RSS.nc

Format:

netcdf4_classic

Global Attributes:

contact = 'Remote Sensing Systems, support@remss.com'

Conventions = 'CF-1.6'

data_structure = 'grid'

title = 'RSS CCMP V2.0 derived surface winds (Level 3.0)'

history = '20160212T164116ZZ - netCDF generated from original data using MATLAB 8.5.0 by RSS vam_v2.0.analysis.t20110902.z00.dat; vam_v2.0.analysis.t20110902.z06.dat; vam_v2.0.analysis.t20110902.z12.dat; vam_v2.0.analysis.t20110902.z18.dat'

description = 'RSS VAM 6-hour analyses starting from the ERA-Interim wind analyses'

summary = 'CCMP V2.0 has been created using the same VAM as CCMP V1.1 only it is now running at Remote Sensing Systems. Input data have changed and now include all V7 radiometer data from RSS, V8.1 GMI data from RSS, scatterometer data from RSS (V4 QuikSCAT and V1.2 ASCAT), quality checked moored buoy data from NDBC, PMEL, and ISDM, and ERA-Interim data from ECMWF.'

institute_id = 'RSS'

institution = 'Remote Sensing Systems (RSS)'

base_date = 'Y2011 M09 D02'

comment = 'none'

license = 'available for public use with proper citation'

product_version = 'v2.0'

netcdf_version_id = '4.2'

date_created = '20160212T164116Z'

geospatial_lat_units = 'degrees_north'

geospatial_lat_resolution = '0.25 degrees'

geospatial_lat_min = '-78.375 degrees'

geospatial_lat_max = '78.375 degrees'

geospatial_lon_units = 'degrees_east'

geospatial_lon_resolution = '0.25 degrees'

geospatial_lon_min = '0.125 degrees'

geospatial_lon_max = '359.875 degrees'

creator_name = 'Remote Sensing Systems'

creator_email = 'support@remss.com'

creator_url = 'http://www.remss.com/'

project = 'RSS Cross-Calibrated Multi-Platform Ocean Surface Wind Project'

publisher_name = 'Remote Sensing Systems'

publisher_email = 'support@remss.com'

publisher_url = 'http://www.remss.com/'

contributor_name = 'Joel Scott, Frank Wentz, Ross Hoffman, Mark Leidner, Robert Atlas, Joe Ardizzone'

contributor_role = 'Software Engineer, Project Lead, Co-Investigator, Software Engineer, Principal Investigator, Software Engineer'

processing_level = 'L3.0'

keywords = 'surface winds, ocean winds, wind speed/wind direction, MEaSUREs, 10km - < 50km or approximately 0.09 degree - < 0.5 degree'

keywords_vocabulary = 'GCMD Science Keywords'

references = 'Hoffman et al., Journal of Atmospheric and Oceanic Technology, 2013; Atlas et al., BAMS, 2011; Atlas et al., BAMS, 1996'

Dimensions:

longitude = 1440

latitude = 628

time = 4

Variables:

longitude

Size: 1440x1

Dimensions: longitude

Datatype: single

Attributes:

standard_name = 'longitude'

long_name = 'Longitude in degrees east'

units = 'degrees_east'

_Fillvalue = -9999

axis = 'X'

valid_min = 0.125

valid_max = 359.875

_CoordinateAxisType = 'Lon'

coordinate_defines = 'center'

latitude

Size: 628x1

Dimensions: latitude

Datatype: single

Attributes:

standard_name = 'latitude'

long_name = 'Latitude in degrees north'

units = 'degrees_north'

_Fillvalue = -9999

axis = 'Y'

valid_min = -78.375

valid_max = 78.375

_CoordinateAxisType = 'Lat'

coordinate_defines = 'center'

time

Size: 4x1

Dimensions: time

Datatype: double

Attributes:

standard_name = 'time'

long_name = 'Time of analysis'

units = 'hours since 1987-01-01 00:00:00'

delta_t = '0000-00-00 06:00:00'

avg_period = ''

_Fillvalue = -9999

calendar = 'standard'

axis = 'T'

valid_min = 216240

valid_max = 216258

_CoordinateAxisType = 'Time'

uwnd

Size: 1440x628x4

Dimensions: longitude,latitude,time

Datatype: single

Attributes:

standard_name = 'eastward_wind'

long_name = 'u-wind vector component at 10 meters'

units = 'm s-1'

height = '10 meters above sea-level'

_Fillvalue = -9999

valid_min = -29.0874

valid_max = 28.8251

coordinates = 'time latitude longitude'

vwnd

Size: 1440x628x4

Dimensions: longitude,latitude,time

Datatype: single

Attributes:

standard_name = 'northward_wind'

long_name = 'v-wind vector component at 10 meters'

units = 'm s-1'

height = '10 meters above sea-level'

_Fillvalue = -9999

valid_min = -23.203

valid_max = 32.6429

coordinates = 'time latitude longitude'

nobs

Size: 1440x628x4

Dimensions: longitude,latitude,time

Datatype: single

Attributes:

standard_name = 'number_of_observations'

long_name = 'number of observations used to derive wind vector components'

units = 'count'

_Fillvalue = -9999

ancillary_variables = 'uwnd vwnd'

valid_min = 0

valid_max = 10

coordinates = 'time latitude longitude'

The script merge_ccmp.m below merges the nc files for 20110901-20110910; adjust the parameters as needed:

%%

% description: merge multiple netCDF files for a specific domain

% usage:

% 1. filenumber is up to the number of your netcdf file to be processed.

% 2. for different domain you want to process, you can change the number

% in the latitude0, longitude0, uwind0, vwind0.

% author:

% huang xue zhi, dalian university of technology

% revision history

% 2018-09-25 first version.

%%

clear;clc;

% begin to merge multiple netcdf files,for example,ccmp wind field reanalysis.

% define the data path and filelist

datadir='D:\ccmp\data_L3\';

filelist=dir([datadir,'*.nc']);

% define the total numbers of netcdf files to be processed.

filenumber=size(filelist,1); % total number of nc files

%% batch reading from the netcdf file

for i=1:filenumber

% inspect the nc file information

%ncdisp(strcat(datadir,filelist(i).name),'/','min')

%ncdisp(strcat(datadir,filelist(i).name),'/','full')

% batch reading the variable to another arrays.

ncid=[datadir,filelist(i).name];

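latitude0=ncread(ncid,'latitude'); % 0.25-degree spacing

longitude0=ncread(ncid,'longitude'); % 0.25-degree spacing

time(:,i)=ncread(ncid,'time'); % extra array dimension (one column per file) keeps every file's data

uwind0(:,:,:,i)=ncread(ncid,'uwnd'); % extra array dimension (one slice per file) keeps every file's data

vwind0(:,:,:,i)=ncread(ncid,'vwnd'); % extra array dimension (one slice per file) keeps every file's data

% select a regional subdomain (latitude/longitude index ranges)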

%latitude=latitude0(74:435);

%longitude=longitude0(80:481);

%uwind(:,:,:,i)=uwind0(80:481,74:435,:,i);

%vwind(:,:,:,i)=vwind0(80:481,74:435,:,i);

latitude=latitude0;

longitude=longitude0;

uwind=uwind0;

vwind=vwind0;

end

%% create the merged netcdf file to store the result.

filename = 'ccmp20110901to10.nc'; % name of the merged nc file

cid=netcdf.create(filename,'clobber'); % help netcdf.create

%define global attributes

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'Conventions','CF-1.6'); % help netcdf.putAtt

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lat_min','-78.375 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lat_max','78.375 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lon_min','0.125 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lon_max','359.875 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'institution','RSS');

% define the variable dimension

dimlon=netcdf.defDim(cid,'longitude',size(longitude,1));

dimlat=netcdf.defDim(cid,'latitude',size(latitude,1));

dimtime=netcdf.defDim(cid,'time',filenumber*4); % four time steps per day

% define the variable and their attributes

varid1=netcdf.defVar(cid,'time','NC_DOUBLE',dimtime);

netcdf.putAtt(cid,varid1,'standard_name','time');

netcdf.putAtt(cid,varid1,'long_name','Time of analysis');

netcdf.putAtt(cid,varid1,'units','hours since 1987-01-01 00:00:00');

netcdf.putAtt(cid,varid1,'delta_t','0000-00-00 06:00:00');

varid2=netcdf.defVar(cid,'latitude','NC_FLOAT',dimlat);

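netcdf.putAtt(cid,varid2,'standard_name','latitude'); % the recorded run used 'time' here (copy-paste slip), which is why the ncdisp listing of the merged file shows standard_name = 'time' for latitude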

netcdf.putAtt(cid,varid2,'units','degrees_north');

netcdf.putAtt(cid,varid2,'long_name','Latitude in degrees north');

netcdf.putAtt(cid,varid2,'_Fillvalue','-9999.0');

netcdf.putAtt(cid,varid2,'axis','Y');

varid3=netcdf.defVar(cid,'longitude','NC_FLOAT',dimlon);

netcdf.putAtt(cid,varid3,'standard_name','longitude');

netcdf.putAtt(cid,varid3,'units','degrees_east');

netcdf.putAtt(cid,varid3,'long_name','Longitude in degrees east');

netcdf.putAtt(cid,varid3,'_Fillvalue','-9999.0');

netcdf.putAtt(cid,varid3,'axis','X');

varid4=netcdf.defVar(cid,'u10','NC_FLOAT',[dimlon dimlat dimtime]);

netcdf.putAtt(cid,varid4,'standard_name','eastward_wind');

netcdf.putAtt(cid,varid4,'long_name','u-wind vector component at 10 meters');

netcdf.putAtt(cid,varid4,'units','m s-1');

netcdf.putAtt(cid,varid4,'_Fillvalue','-9999.0');

netcdf.putAtt(cid,varid4,'coordinates','time latitude longitude')

varid5=netcdf.defVar(cid,'v10','NC_FLOAT',[dimlon dimlat dimtime]);

netcdf.putAtt(cid,varid5,'standard_name','northward_wind');

netcdf.putAtt(cid,varid5,'long_name','v-wind vector component at 10 meters');

netcdf.putAtt(cid,varid5,'units','m s-1');

netcdf.putAtt(cid,varid5,'_Fillvalue','-9999.0');

netcdf.putAtt(cid,varid5,'coordinates','time latitude longitude')

netcdf.endDef(cid);

% end of variable and attribute definitions

%% write variables value to merged netcdf file

netcdf.putVar(cid,varid1,time);

netcdf.putVar(cid,varid2,latitude);

netcdf.putVar(cid,varid3,longitude);

netcdf.putVar(cid,varid4,uwind);

netcdf.putVar(cid,varid5,vwind);

% add a file-size attribute

netcdf.reDef(cid); % putAtt is not allowed in data mode, so re-enter define mode

lst=dir(filename); xi=lst.bytes;

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'space size',strcat(num2str(xi/1024/1024),'Mb'));

%ncdisp(filename,'/','full');

netcdf.close(cid);

Running the script produces the merged nc file ccmp20110901to10.nc. Inspecting it:

>> ncdisp(filename,'/','full')

Source:

D:\ccmp\ccmp20110901to10.nc

Format:

classic

Global Attributes:

Conventions = 'CF-1.6'

geospatial_lat_min = '-78.375 degrees'

geospatial_lat_max = '78.375 degrees'

geospatial_lon_min = '0.125 degrees'

geospatial_lon_max = '359.875 degrees'

institution = 'RSS'

space size = '275.9862Mb'

Dimensions:

longitude = 1440

latitude = 628

time = 40

Variables:

time

Size: 40x1

Dimensions: time

Datatype: double

Attributes:

standard_name = 'time'

long_name = 'Time of analysis'

units = 'hours since 1987-01-01 00:00:00'

delta_t = '0000-00-00 06:00:00'

latitude

Size: 628x1

Dimensions: latitude

Datatype: single

Attributes:

standard_name = 'time'

units = 'degrees_north'

long_name = 'Latitude in degrees north'

_Fillvalue = '-9999.0'

axis = 'Y'

longitude

Size: 1440x1

Dimensions: longitude

Datatype: single

Attributes:

standard_name = 'longitude'

units = 'degrees_east'

long_name = 'Longitude in degrees east'

_Fillvalue = '-9999.0'

axis = 'X'

u10

Size: 1440x628x40

Dimensions: longitude,latitude,time

Datatype: single

Attributes:

standard_name = 'eastward_wind'

long_name = 'u-wind vector component at 10 meters'

units = 'm s-1'

_Fillvalue = '-9999.0'

coordinates = 'time latitude longitude'

v10

Size: 1440x628x40

Dimensions: longitude,latitude,time

Datatype: single

Attributes:

standard_name = 'northward_wind'

long_name = 'v-wind vector component at 10 meters'

units = 'm s-1'

_Fillvalue = '-9999.0'

coordinates = 'time latitude longitude'

Merging CCMP nc data (MATLAB, specifically for ww3_prnc)

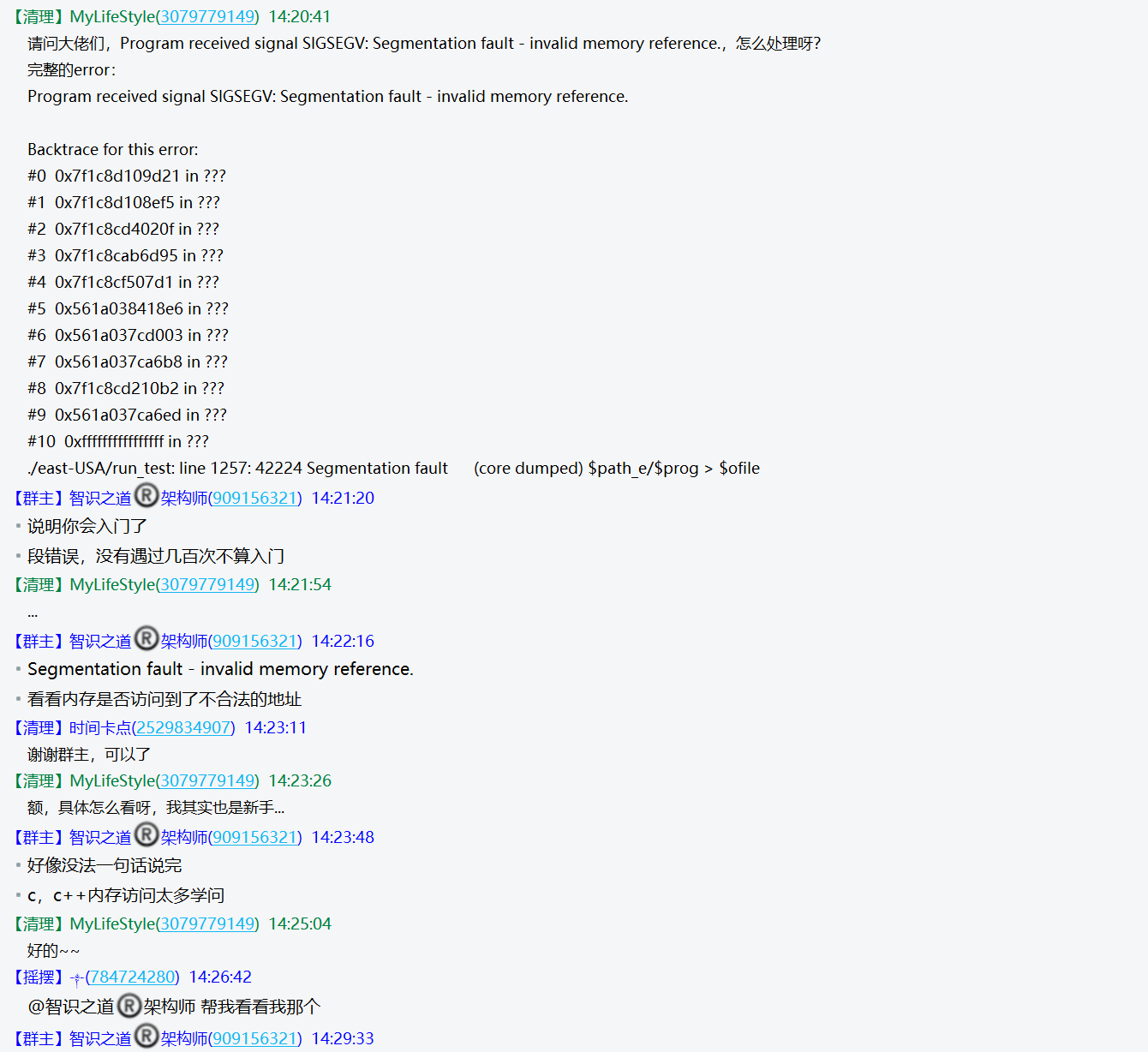

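When the merged nc file produced by the code above is fed to ww3_prnc, the run fails with the error message "Segmentation fault - invalid memory reference", which is memory-related. The cause is that ww3_prnc expects the attribute to be named _FillValue rather than _Fillvalue, and its value must be the number -9999, not a quoted string.

Using the original CCMP nc files directly produces the same error.

The script merge_ccmp_ww3.m below merges the nc files for 20110901-20110910 for use with ww3_prnc: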

%%

% description: merge multiple netCDF files for a specific domain

% usage:

% 1. filenumber is up to the number of your netcdf file to be processed.

% 2. for different domain you want to process, you can change the number

% in the latitude0, longitude0, uwind0, vwind0.

% author:

% huang xue zhi, dalian university of technology

% liu jin can, UPC

% revision history

% 2018-09-25 first version.

% 2022-02-10 adapted for ww3.

%%

clear;clc;

% begin to merge multiple netcdf files,for example,ccmp wind field reanalysis.

% define the data path and filelist

datadir='D:\ccmp\data_L3\';

filelist=dir([datadir,'*.nc']);

% define the total numbers of netcdf files to be processed.

filenumber=size(filelist,1); % total number of nc files

%% batch reading from the netcdf file

for i=1:filenumber

% inspect the nc file information

%ncdisp(strcat(datadir,filelist(i).name),'/','min')

%ncdisp(strcat(datadir,filelist(i).name),'/','full')

% batch reading the variable to another arrays.

ncid=[datadir,filelist(i).name];

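latitude0=ncread(ncid,'latitude'); % 0.25-degree spacing

longitude0=ncread(ncid,'longitude'); % 0.25-degree spacing

time(:,i)=ncread(ncid,'time'); % extra array dimension (one column per file) keeps every file's data

uwind0(:,:,:,i)=ncread(ncid,'uwnd'); % extra array dimension (one slice per file) keeps every file's data

vwind0(:,:,:,i)=ncread(ncid,'vwnd'); % extra array dimension (one slice per file) keeps every file's data

% select a regional subdomain (latitude/longitude index ranges)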

%latitude=latitude0(74:435);

%longitude=longitude0(80:481);

%uwind(:,:,:,i)=uwind0(80:481,74:435,:,i);

%vwind(:,:,:,i)=vwind0(80:481,74:435,:,i);

latitude=latitude0;

longitude=longitude0;

uwind=uwind0;

vwind=vwind0;

end

%% create the merged netcdf file to store the result.

filename = 'wind10.nc'; % name of the merged nc file

% cmode options: see help netcdf.create

%cid=netcdf.create(filename,'clobber');

cid=netcdf.create(filename,'64BIT_OFFSET'); % 64BIT_OFFSET

%define global attributes

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'Conventions','CF-1.6'); % help netcdf.putAtt

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'data_structure','grid');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lat_min','-78.375 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lat_max','78.375 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lon_min','0.125 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'geospatial_lon_max','359.875 degrees');

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'institution','Remote Sensing Systems (RSS)');

% define the variable dimension

dimlon=netcdf.defDim(cid,'longitude',size(longitude,1));

dimlat=netcdf.defDim(cid,'latitude',size(latitude,1));

dimtime=netcdf.defDim(cid,'time',filenumber*4); % 4 time steps per day, one file per day

% define the variable and their attributes

varid1=netcdf.defVar(cid,'time','NC_DOUBLE',dimtime); % help netcdf.defVar

netcdf.putAtt(cid,varid1,'standard_name','time');

netcdf.putAtt(cid,varid1,'long_name','Time of analysis');

netcdf.putAtt(cid,varid1,'units','hours since 1987-01-01 00:00:00');

netcdf.putAtt(cid,varid1,'delta_t','0000-00-00 06:00:00');

netcdf.putAtt(cid,varid1,'calendar','standard');

netcdf.putAtt(cid,varid1,'valid_min',min(time(:))); % time is 4 x filenumber, so flatten before min

netcdf.putAtt(cid,varid1,'valid_max',max(time(:)));

netcdf.putAtt(cid,varid1,'axis','T');

%varid2=netcdf.defVar(cid,'latitude','NC_FLOAT',dimlat);

varid2=netcdf.defVar(cid,'latitude','NC_DOUBLE',dimlat); % NC_DOUBLE needs roughly twice the storage of NC_FLOAT

netcdf.putAtt(cid,varid2,'standard_name','latitude');

netcdf.putAtt(cid,varid2,'units','degrees_north');

netcdf.putAtt(cid,varid2,'long_name','Latitude in degrees north');

netcdf.putAtt(cid,varid2,'valid_min',min(latitude));

netcdf.putAtt(cid,varid2,'valid_max',max(latitude));

netcdf.putAtt(cid,varid2,'axis','Y');

%varid3=netcdf.defVar(cid,'longitude','NC_FLOAT',dimlon);

varid3=netcdf.defVar(cid,'longitude','NC_DOUBLE',dimlon);

netcdf.putAtt(cid,varid3,'standard_name','longitude');

netcdf.putAtt(cid,varid3,'units','degrees_east');

netcdf.putAtt(cid,varid3,'long_name','Longitude in degrees east');

netcdf.putAtt(cid,varid3,'valid_min',min(longitude));

netcdf.putAtt(cid,varid3,'valid_max',max(longitude));

netcdf.putAtt(cid,varid3,'axis','X');

%varid4=netcdf.defVar(cid,'u10m','NC_FLOAT',[dimlon dimlat dimtime]);

varid4=netcdf.defVar(cid,'u10m','NC_DOUBLE',[dimlon dimlat dimtime]);

netcdf.putAtt(cid,varid4,'standard_name','eastward_wind');

netcdf.putAtt(cid,varid4,'long_name','u-wind vector component at 10 meters');

netcdf.putAtt(cid,varid4,'units','m s-1');

netcdf.putAtt(cid,varid4,'_FillValue',-9999);

netcdf.putAtt(cid,varid4,'coordinates','time latitude longitude')

netcdf.putAtt(cid,varid4,'valid_min',min(uwind(:)));

netcdf.putAtt(cid,varid4,'valid_max',max(uwind(:)));

%varid5=netcdf.defVar(cid,'v10m','NC_FLOAT',[dimlon dimlat dimtime]);

varid5=netcdf.defVar(cid,'v10m','NC_DOUBLE',[dimlon dimlat dimtime]); % NC_FLOAT here fails at endDef because the _FillValue below is a double

netcdf.putAtt(cid,varid5,'standard_name','northward_wind');

netcdf.putAtt(cid,varid5,'long_name','v-wind vector component at 10 meters');

netcdf.putAtt(cid,varid5,'units','m s-1');

netcdf.putAtt(cid,varid5,'_FillValue',-9999);

netcdf.putAtt(cid,varid5,'coordinates','time latitude longitude')

netcdf.putAtt(cid,varid5,'valid_min',min(vwind(:)));

netcdf.putAtt(cid,varid5,'valid_max',max(vwind(:)));

% the nobs variable is not included

netcdf.endDef(cid);

% end define the variable and attributes

%% write variables value to merged netcdf file

netcdf.putVar(cid,varid1,time);

netcdf.putVar(cid,varid2,latitude);

netcdf.putVar(cid,varid3,longitude);

netcdf.putVar(cid,varid4,uwind);

netcdf.putVar(cid,varid5,vwind);

% add a file-size attribute

netcdf.reDef(cid); % putAtt is not allowed in data mode, so re-enter define mode

lst=dir(filename); xi=lst.bytes;

netcdf.putAtt(cid,netcdf.getConstant('NC_GLOBAL'),'space size',strcat(num2str(xi/1024/1024),'Mb'));

%ncdisp(filename,'/','full');

netcdf.close(cid);

Problem: the merged file takes up too much space!

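The 10 individual files take up about 270 MB, while the file generated by this program is over 550 MB, almost twice as much.

The cause is the double type (NC_DOUBLE): the original files store the winds as single (NC_FLOAT). Switching the variables to single, however, raises an error at netcdf.endDef(cid):

Error using netcdflib. The NetCDF library encountered an error during execution of the 'endDef' function - 'Not a valid data type or _FillValue type mismatch (NC_EBADTYPE)'. Error in netcdf.endDef (line 33): netcdflib('endDef', ncid); Error in Copy_2_of_merge_ccmp (line 133): netcdf.endDef(cid);

How can this be solved? The message itself points to a type mismatch: the _FillValue attribute has to have the same type as its variable, and a bare -9999 in MATLAB is a double. Passing single(-9999) as the _FillValue of an NC_FLOAT variable is the likely fix, although it has not been tested in these notes.

One way to reduce the storage burden: store only the region of interest.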
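The sizes quoted above can be checked with a little arithmetic. The sketch below is only an estimate: it counts the two wind arrays and ignores the coordinate variables, the nobs variable and the file header. The grid size (1440 x 628), the 4 time steps per file and the 10 files come from these notes; the function names are made up for illustration.

```python
# Rough size of the merged u/v wind arrays for different storage types.
BYTES_PER_VALUE = {"NC_FLOAT": 4, "NC_DOUBLE": 8}


def wind_bytes(nlon, nlat, ntime, nc_type, nvars=2):
    """Bytes needed for nvars arrays of shape (nlon, nlat, ntime)."""
    return nlon * nlat * ntime * nvars * BYTES_PER_VALUE[nc_type]


def to_mib(nbytes):
    """Convert bytes to MiB, the same division the script uses (1024*1024)."""
    return nbytes / 1024 / 1024


ntime = 10 * 4  # 10 daily files, 4 analysis times each

full_float = wind_bytes(1440, 628, ntime, "NC_FLOAT")
full_double = wind_bytes(1440, 628, ntime, "NC_DOUBLE")

# Sub-region taken from the commented-out indices 80:481 (longitude)
# and 74:435 (latitude) in the script.
region_float = wind_bytes(481 - 80 + 1, 435 - 74 + 1, ntime, "NC_FLOAT")

print(f"full grid, NC_FLOAT : {to_mib(full_float):7.1f} MiB")
print(f"full grid, NC_DOUBLE: {to_mib(full_double):7.1f} MiB")
print(f"region,    NC_FLOAT : {to_mib(region_float):7.1f} MiB")
```

The full-grid figures come out at roughly 276 MiB and 552 MiB, close to the 270 MB and 550 MB observed, while the sub-region stored as single needs about 44 MiB.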

Implementation still to be worked out:

—————————————

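References

NDBC (National Data Buoy Center); the site has to be opened in Firefox.

Historical data download on the official site: https://www.ndbc.noaa.gov/historical_data.shtml

Choose the data type you need, open the year for the buoy, and download that year's gzip file.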

https://www.ndbc.noaa.gov/measdes.shtml#cwind

Measurement Descriptions and Units (released in 20220211): describes each variable in the real-time and historical data files

Real Time files & Historical files

Real Time files generally contain the last 45 days of “Realtime” data - data that went through automated quality checks and were distributed as soon as they were received. Historical files have gone through post-processing analysis and represent the data sent to the archive centers.

The formats for both are generally the same, with the major difference being the treatment of missing data. Missing data in the Realtime files are denoted by “MM” while a variable number of 9’s are used to denote missing data in the Historical files, depending on the data type (for example: 999.0 99.0).
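As a minimal sketch of the two missing-data conventions described above, the snippet below maps "MM" and per-column runs of 9's to NaN. The sentinel table is an assumption built only from the continuous-wind sample quoted later in these notes (999 for GDR, 99.0 for GST, 9999 for GTIME); other columns would need their own entries, and the names `HISTORICAL_SENTINELS` and `parse_field` are hypothetical.

```python
import math

# Sentinels copied from the continuous-wind sample in these notes.
# This table is illustrative, not a complete NDBC specification.
HISTORICAL_SENTINELS = {"GDR": "999", "GST": "99.0", "GTIME": "9999"}


def parse_field(column, token, sentinels=HISTORICAL_SENTINELS):
    """Return the token as a float, or NaN when it marks missing data."""
    if token == "MM":                   # "MM" convention
        return math.nan
    if sentinels.get(column) == token:  # run-of-9's convention, checked per column
        return math.nan
    return float(token)


header = "WDIR WSPD GDR GST GTIME".split()
row = "315 7.8 999 99.0 9999".split()
record = {col: parse_field(col, tok) for col, tok in zip(header, row)}
print(record)
```

Checking the sentinel per column rather than treating every run of 9's as missing avoids discarding legitimate values such as a 9-second wave period.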

General

Units: Station pages display the current hour’s measurements in English units by default, but can be changed by the viewer to metric units. When accessing Real Time and Historical data files, the measurements are generally in metric units, as described below, and cannot be changed.

For the differences between English units and metric units, see: https://zhuanlan.zhihu.com/p/77853579

Time: Station pages show current observations in station local time by default, but can be changed by the viewer to UTC (formerly GMT). Both Realtime and Historical files show times in UTC only. See the Acquisition Time help topic: Do NDBC's meteorological and oceanographic sensors measure data for the entire hour? for a more detailed description of observation times. For more information on the times in the files, see the changes page: Important NDBC Web Site Changes.

- GMT = UTC+0

- UTC+8 is Beijing time.
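Since the data files carry UTC timestamps, converting a record to Beijing time is a fixed +8 hour shift. A small sketch using only the standard library follows; the function name `ndbc_time_to_beijing` is made up for illustration, and the sample timestamp is the first row of the standard met example in these notes.

```python
from datetime import datetime, timedelta, timezone

BEIJING = timezone(timedelta(hours=8), "UTC+8")


def ndbc_time_to_beijing(yy, mm, dd, hh, mn):
    """Interpret the five NDBC time columns as UTC and convert to UTC+8."""
    utc = datetime(int(yy), int(mm), int(dd), int(hh), int(mn), tzinfo=timezone.utc)
    return utc.astimezone(BEIJING)


local = ndbc_time_to_beijing(*"2007 04 15 13 50".split())
print(local.isoformat())  # 2007-04-15T21:50:00+08:00
```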

Station ID: Five-digit WMO Station Identifier: How are the station ID numbers created?, used since 1976. ID’s can be reassigned to future deployments within the same 1 degree square.

Formats: Data are classified according to the following groups. The header lines are shown at the beginning of group. Note that in the Realtime files, non-data lines begin with “#”. Such lines should be treated as comment lines.

help topic: Do NDBC’s meteorological and oceanographic sensors measure data for the entire hour?

Sensors that are installed on board moored buoys and at C-MAN sites generally do not measure and record data for the entire hour. Continuously recording data drastically increases power consumption. Therefore, for most NDBC-measured environmental data, with spectral wave measurements and continuous winds being exceptions, an eight-minute period is used for data collected by sensors on board moored buoys and a two-minute acquisition period is used for data collected by sensors at C-MAN sites.

Starting in July 2004, the end-of-acquisition time is reported as the official observation time, to the hour and minute resolution. Before July 2004, the observation time is simply the rounded hour nearest to the acquisition period, not displaying minutes. July’s change makes the observation times more consistent with the data provided to the archive centers. Starting in August 1993, the end-of-acquisition time is reported as the official observation time for data provided to the national archive centers. Before August 1993, the observation time is the rounded hour nearest to the acquisition period.

Note: Wave data normally have acquisition periods that do not overlap meteorological data acquisition. Currently, wave data times are rounded to the nearest hour for 40 minute acquisition systems or nearest half hour for 20 minute acquisition systems. Reporting the actual end-of-acquisition time for wave data is under consideration. However, on some pages, wave information is displayed on the same lines as meteorological data, and will always appear to have the meteorological data time for those displays.

For ocean current measurements, Acoustic Doppler Current Profiler (ADCP) times are rounded to the nearest hour. For oceanographic data measurements, times are rounded to the nearest hour. Reporting the actual end-of-acquisition times for these data will be taken under consideration.

For Continuous Wind stations, the end-of-acquisition time is given in the record. For other stations, the actual acquisition period may be determined by knowing the station type and payload. The end-of-acquisition minute is reported for DACT and VEEP payloads, and is minute 50 for those payloads installed on moored buoys. This means that 1200 UTC data was recorded from 1142-1150 UTC. The start of acquisition is reported for all GSBP payloads, and is minute 40 for GSBP’s installed on moored buoys. This means that 1200 UTC data was recorded from 1140-1148 UTC. The end-of-acquisition minute for C-MAN sites is the top of the hour. Prior to January 1992, the end-of-acquisition time was minute 25 for Gulf of Mexico C-MAN stations. During the period from September 1993 to August 1994, the end-of-acquisition times on West Coast C-MAN sites were changed from minute 25 to the top of the hour.

However, some stations do not follow the above timing convention and have non-standard acquisition times.
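The moored-buoy timing rules quoted above can be turned into a small calculation. The sketch below assumes a report stamped exactly on the hour, the standard eight-minute buoy acquisition period, and only the DACT, VEEP and GSBP payloads named in the text. The function name `buoy_acquisition_window` is hypothetical, and stations with non-standard timing are not covered.

```python
from datetime import datetime, timedelta

BUOY_PERIOD = timedelta(minutes=8)  # eight-minute acquisition on moored buoys


def buoy_acquisition_window(nominal_hour, payload):
    """Return (start, end) of the acquisition behind an on-the-hour report."""
    previous_hour = nominal_hour - timedelta(hours=1)
    if payload in ("DACT", "VEEP"):
        end = previous_hour.replace(minute=50)    # end of acquisition at minute 50
        return end - BUOY_PERIOD, end
    if payload == "GSBP":
        start = previous_hour.replace(minute=40)  # start of acquisition at minute 40
        return start, start + BUOY_PERIOD
    raise ValueError(f"payload not covered by this sketch: {payload}")


report = datetime(2007, 3, 5, 12, 0)
for payload in ("DACT", "GSBP"):
    start, end = buoy_acquisition_window(report, payload)
    print(payload, start.strftime("%H%M"), end.strftime("%H%M"))
```

For a 1200 UTC report this reproduces the 1142-1150 window for DACT/VEEP and the 1140-1148 window for GSBP given in the text.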

Wave acquisition times are also listed on this WWW server.

changes page: Important NDBC Web Site Changes

NDBC is making several format changes to the headers of its web data files. Note! These changes apply to the data files, not the station page’s current observations listing! Click the Customer Survey link at the bottom of this page to give feedback on the changes.

Description

- A “#” sign starts header or other metadata lines (April 10th, 2007).

This will facilitate having a second header line for units of measurement (see below) and other lines interspersed in the data for significant changes in metadata, such as a station position change.

- Column headings were standardized (April 10th, 2007).

Some columns for the same measurement had different headings, depending on which data group they were in. For example, the column heading for air pressure was PRES in one list and BARO in another. PRES will be the standard for air pressure, APD will be the standard for Average Wave Period, WVHT will be the standard for Significant Wave Height, GST will be the standard for wind Gust speed.

- A second header line was added to specify units of measurement (April 10th, 2007).

The 5-day and 45-day realtime data files will be modified to include a second header line that specifies the unit of measure for each column. Generally, the units in the data files are different than the units on the station pages, which has caused misinterpretation. Note! The units are not changing - we are just adding information about the units. Raw spectral wave data files will not have the second header, as these raw data are unitless. See Measurement Descriptions and Units for more information on the units of measure used on the NDBC web site.

NOTE! This year’s monthly historical files will use the new format, beginning with the 2007 January files. Prior year historical files will remain in the old format!
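The header conventions described above (lines starting with "#", a first header line with column names, a second with units) can be read with a few lines of code. This is a minimal sketch, assuming the first two "#" lines are always the name and unit headers and that any further "#" lines are metadata to be skipped. `parse_realtime` is a made-up name, and the sample text is the standard met example from these notes.

```python
SAMPLE = """\
#YY MM DD hh mm WDIR WSPD GST WVHT DPD APD MWD PRES ATMP WTMP DEWP VIS PTDY TIDE
#yr mo dy hr mn degT m/s m/s m sec sec degT hPa degC degC degC mi hPa ft
2007 04 15 13 50 120 4.0 6.0 0.4 3 MM MM 1023.4 20.6 22.5 10.8 MM +1.7 MM
2007 04 15 12 50 140 4.0 5.0 0.4 4 MM MM 1023.0 20.4 22.4 10.3 MM +1.7 MM
"""


def parse_realtime(text):
    """Split a data file into a {column: unit} map and a list of row dicts."""
    comment_lines, rows = [], []
    for line in text.splitlines():
        if not line.strip():
            continue
        if line.startswith("#"):
            comment_lines.append(line.lstrip("#").split())
        else:
            rows.append(line.split())
    names, unit_names = comment_lines[0], comment_lines[1]
    units = dict(zip(names, unit_names))
    # Values stay as strings; "MM" (month) and "mm" (minute) are distinct keys.
    records = [dict(zip(names, row)) for row in rows]
    return units, records


units, records = parse_realtime(SAMPLE)
print(units["PRES"], records[0]["PRES"])
```

Keeping the values as strings at this stage leaves the missing-data handling ("MM" versus runs of 9's) to a separate step.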

Example of standard met data file/list

#YY MM DD hh mm WDIR WSPD GST WVHT DPD APD MWD PRES ATMP WTMP DEWP VIS PTDY TIDE

#yr mo dy hr mn degT m/s m/s m sec sec degT hPa degC degC degC mi hPa ft

2007 04 15 13 50 120 4.0 6.0 0.4 3 MM MM 1023.4 20.6 22.5 10.8 MM +1.7 MM

2007 04 15 12 50 140 4.0 5.0 0.4 4 MM MM 1023.0 20.4 22.4 10.3 MM +1.7 MM

2007 04 15 11 50 120 5.0 6.0 0.4 3 MM MM 1022.1 20.0 22.4 10.9 MM +0.6 MM

Example of continuous wind data format file/list

#YY MM DD hh mm WDIR WSPD GDR GST GTIME

#yr mo dy hr mn degT m/s degT m/s hhmm

2007 03 05 06 20 314 8.0 320 10.0 0604

2007 03 05 06 10 315 7.8 999 99.0 9999

2007 03 05 06 00 314 7.8 999 99.0 9999

Example of wave summary data file/list

#YY MM DD hh mm WVHT SwH SwP WWH WWP SwD WWD STEEPNESS APD MWD

#yr mo dy hr mn m m sec m sec - degT - sec degT

2007 03 05 05 32 1.5 0.5 11.0 1.5 9.0 W MM AVERAGE MM -99

2007 03 05 05 02 1.5 1.0 11.0 1.5 9.0 WNW MM AVERAGE MM -99

Example of raw wave spectra data file/list

#YY MM DD hh mm Sep_Freq < spec_1 (freq_1) spec_2 (freq_2) spec_3 (freq_3) ... >

2007 03 05 06 30 0.143 0.000 (0.033) 0.000 (0.037) 0.000 (0.043) 0.000 (0.048) 0.000 (0.053) 0.000 (0.058) 0.000 (0.062) 0.000 (0.068) 0.000 (0.073) 0.000 (0.077) 0.000 (0.083) 0.000 (0.087) 0.000 (0.092) 0.000 (0.100) 0.000 (0.110) 0.199 (0.120) 0.176 (0.130) 0.796 (0.140) 0.550 (0.150) 0.374 (0.160) 0.702 (0.170) 0.842 (0.180) 0.527 (0.190) 0.562 (0.200) 0.725 (0.210) 0.702 (0.220) 0.761 (0.230) 0.667 (0.240) 1.170 (0.250) 0.538 (0.260) 0.679 (0.270) 0.351 (0.280) 0.421 (0.290) 0.339 (0.300) 0.304 (0.310) 0.117 (0.320) 0.152 (0.330) 0.140 (0.340) 0.105 (0.350) 0.070 (0.365) 0.105 (0.385) 0.035 (0.405) 0.047 (0.425) 0.012 (0.445) 0.012 (0.465) 0.000 (0.485)

Example of raw spectral wave (alpha1) data file/list

#YY MM DD hh mm alpha1_1 (freq_1) alpha1_2 (freq_2) alpha1_3 (freq_3) ... >

2007 03 05 06 30 999.0 (0.033) 999.0 (0.037) 999.0 (0.043) 999.0 (0.048) 999.0 (0.053) 999.0 (0.058) 999.0 (0.062) 999.0 (0.068) 999.0 (0.073) 999.0 (0.077) 999.0 (0.083) 999.0 (0.087) 999.0 (0.092) 296.0 (0.100) 144.0 (0.110) 204.0 (0.120) 188.0 (0.130) 192.0 (0.140) 196.0 (0.150) 200.0 (0.160) 216.0 (0.170) 216.0 (0.180) 232.0 (0.190) 264.0 (0.200) 320.0 (0.210) 328.0 (0.220) 320.0 (0.230) 324.0 (0.240) 316.0 (0.250) 316.0 (0.260) 324.0 (0.270) 320.0 (0.280) 312.0 (0.290) 324.0 (0.300) 324.0 (0.310) 312.0 (0.320) 328.0 (0.330) 312.0 (0.340) 336.0 (0.350) 332.0 (0.365) 312.0 (0.385) 340.0 (0.405) 336.0 (0.425) 328.0 (0.445) 316.0 (0.465) 340.0 (0.485)

Example of raw spectral wave (alpha2) data file/list

#YY MM DD hh mm alpha2_1 (freq_1) alpha2_2 (freq_2) alpha2_3 (freq_3) ... >

2007 03 05 06 30 999.0 (0.033) 999.0 (0.037) 999.0 (0.043) 999.0 (0.048) 999.0 (0.053) 999.0 (0.058) 999.0 (0.062) 999.0 (0.068) 999.0 (0.073) 999.0 (0.077) 999.0 (0.083) 999.0 (0.087) 999.0 (0.092) 208.0 (0.100) 144.0 (0.110) 180.0 (0.120) 184.0 (0.130) 176.0 (0.140) 196.0 (0.150) 200.0 (0.160) 216.0 (0.170) 212.0 (0.180) 196.0 (0.190) 276.0 (0.200) 328.0 (0.210) 336.0 (0.220) 328.0 (0.230) 328.0 (0.240) 312.0 (0.250) 320.0 (0.260) 324.0 (0.270) 316.0 (0.280) 320.0 (0.290) 324.0 (0.300) 320.0 (0.310) 312.0 (0.320) 328.0 (0.330) 308.0 (0.340) 340.0 (0.350) 336.0 (0.365) 304.0 (0.385) 4.0 (0.405) 8.0 (0.425) 316.0 (0.445) 300.0 (0.465) 348.0 (0.485)

Example of raw spectral wave (r1) data file/list

#YY MM DD hh mm r1_1 (freq_1) r1_2 (freq_2) r1_3 (freq_3) ... >

2007 03 05 06 30 999.00 (0.033) 999.00 (0.037) 999.00 (0.043) 999.00 (0.048) 999.00 (0.053) 999.00 (0.058) 999.00 (0.062) 999.00 (0.068) 999.00 (0.073) 999.00 (0.077) 999.00 (0.083) 999.00 (0.087) 999.00 (0.092) 0.30 (0.100) 0.33 (0.110) 0.56 (0.120) 0.81 (0.130) 0.72 (0.140) 0.87 (0.150) 0.94 (0.160) 0.91 (0.170) 0.81 (0.180) 0.59 (0.190) 0.63 (0.200) 0.81 (0.210) 0.81 (0.220) 0.84 (0.230) 0.89 (0.240) 0.86 (0.250) 0.76 (0.260) 0.91 (0.270) 0.80 (0.280) 0.78 (0.290) 0.91 (0.300) 0.70 (0.310) 0.91 (0.320) 0.77 (0.330) 0.76 (0.340) 0.78 (0.350) 0.84 (0.365) 0.80 (0.385) 0.64 (0.405) 0.64 (0.425) 0.68 (0.445) 0.67 (0.465) 0.74 (0.485)

Example of raw spectral wave (r2) data file/list

#YY MM DD hh mm r2_1 (freq_1) r2_2 (freq_2) r2_3 (freq_3) ... >

2007 03 05 06 30 999.00 (0.033) 999.00 (0.037) 999.00 (0.043) 999.00 (0.048) 999.00 (0.053) 999.00 (0.058) 999.00 (0.062) 999.00 (0.068) 999.00 (0.073) 999.00 (0.077) 999.00 (0.083) 999.00 (0.087) 999.00 (0.092) 0.11 (0.100) 0.41 (0.110) 0.42 (0.120) 0.67 (0.130) 0.45 (0.140) 0.69 (0.150) 0.84 (0.160) 0.76 (0.170) 0.69 (0.180) 0.34 (0.190) 0.20 (0.200) 0.62 (0.210) 0.60 (0.220) 0.58 (0.230) 0.72 (0.240) 0.56 (0.250) 0.38 (0.260) 0.73 (0.270) 0.42 (0.280) 0.40 (0.290) 0.76 (0.300) 0.20 (0.310) 0.73 (0.320) 0.38 (0.330) 0.34 (0.340) 0.39 (0.350) 0.59 (0.365) 0.45 (0.385) 0.32 (0.405) 0.20 (0.425) 0.16 (0.445) 0.08 (0.465) 0.42 (0.485)

Example of oceanographic data file/list

#YY MM DD hh mm DEPTH OTMP COND SAL O2% O2PPM CLCON TURB PH EH

#yr mo dy hr mn m degC mS/cm psu % ppm ug/l FTU - mv

2007 04 27 11 00 2 25.13 55.18 36.45 33.1 2.21 0.06 MM 0.00 98.28

2007 04 27 10 00 2 25.15 55.27 36.45 33.3 2.22 0.06 MM 0.00 95.93

2007 04 27 09 00 2 25.15 55.37 36.45 33.3 2.21 0.06 MM 0.00 94.80

Example of solar radiation data file/list

#YY MM DD hh mm SRAD1 SWRAD LWRAD

#yr mo dy hr mn w/m2 w/m2 w/m2

2007 03 05 06 30 0.0 MM MM

2007 03 05 06 00 0.0 MM MM

Example of DART data file/list

#YY MM DD hh mm ss T HEIGHT

#yr mo dy hr mn s - m

2007 03 05 06 00 00 1 5842.604

2007 03 05 05 45 00 1 5842.586

2007 03 05 05 30 00 1 5842.566

Example of ocean current (adcp) data file/list

#YY MM DD hh mm DEP01 DIR01 SPD01 DEP02 DIR02 SPD02 DEP03 DIR03 SPD03 DEP04 DIR04 SPD04 DEP05 DIR05 SPD05 DEP06 DIR06 SPD06 DEP07 DIR07 SPD07 DEP08 DIR08 SPD08 DEP09 DIR09 SPD09 DEP10 DIR10 SPD10 DEP11 DIR11 SPD11 DEP12 DIR12 SPD12 DEP13 DIR13 SPD13 DEP14 DIR14 SPD14 DEP15 DIR15 SPD15 DEP16 DIR16 SPD16 DEP17 DIR17 SPD17 DEP18 DIR18 SPD18 DEP19 DIR19 SPD19 DEP20 DIR20 SPD20

#yr mo dy hr mn m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s m degT cm/s

2007 03 05 06 30 2 150 7 3 160 7 5 180 6 7 180 7 9 190 7 11 200 8 13 210 8 15 210 8 17 210 9 19 210 9 21 210 9 23 220 9 25 230 8 27 180 2

Example of expanded ocean current (adcp2) data file/list

#YY MM DD hh mm I Bin Depth Dir Speed ErrVl VerVl %Good3 %Good4 %GoodE EI1 EI2 EI3 EI4 CM1 CM2 CM3 CM4 Flags

#yr mo dy hr mn - - m degT cm/s cm/s cm/s % % % - - - - - - - - -

2007 03 05 06 20 0 1 51.0 97 12.6 -0.5 -3.5 99 99 99 224 220 216 227 0 0 0 0 133313330

Example of hourly rain data file/list

#YY MM DD hh mm ACCUM

#yr mo dy hr mn mm

2007 03 05 04 30 0.0

2007 03 05 03 30 0.0

2007 03 05 02 30 0.0

Example of 10 minute rain (rain10) data file/list

#YY MM DD hh mm RATE

#yr mo dy hr mn mm/h

2007 03 05 05 30 0.0

2007 03 05 05 20 0.0

2007 03 05 05 10 0.0

Example of 24 hour rain (rain24) data file/list

#YY MM DD hh mm RATE PCT SDEV

#yr mo dy hr mn mm/h % -

2007 03 04 12 00 0.0 0.0 0.0

2007 03 03 12 00 0.0 0.0 0.0

Example of derived meteorological data file/list

#YY MM DD hh mm CHILL HEAT ICE WSPD10 WSPD20

#yr mo dy hr mn degC degC in/hr m/s m/s

2007 03 05 20 50 MM MM MM 8 8

2007 03 05 19 50 MM MM MM 9 9

2007 03 05 18 50 MM MM MM 8 9

Example of PIRATA and drifter data files/list

#YY MM DD hhmm LAT LON WDIR WSPD GST PRES PTDY ATMP WTMP

#yr mo dy hrmn deg deg degT m/s m/s hPa hPa degC degC

2007 03 06 1700 11.50 -38.01 040 9.3 MM MM MM 25.0 25.1

2007 03 06 1600 11.50 -38.02 040 9.3 MM MM MM 24.9 25.1

2007 03 06 1400 11.50 -38.03 050 8.8 MM MM MM 25.0 25.1

Example of supplemental data file/list

#YY MM DD hh mm PRES PTIME WSPD WDIR WTIME

#yr mo dy hr mn hPa hhmm m/s degT hhmm

2007 03 06 12 00 MM MM 10.0 44 1145

2007 03 06 11 00 MM MM 10.0 33 1037

2007 03 06 10 00 MM MM 10.5 40 0945

Example of ship obs data file/list

#SHIP_ID YY MM DD hh LAT LON WDIR WSPD GST WVHT DPD APD MWD PRES ATMP WTMP DEWP VIS PTDY TCC S1HT S1PD S1DIR S2HT S2PD S2DIR II IE IR IC IS Ib ID Iz

#station yr mo dy hr deg deg degT m/s m/s m sec sec degT hPa degC degC degC mi hPa 8th m sec degT m sec degT - - - - - - - -

SHIP 2007 03 05 21 71.6 22.5 190 15.0 MM 2.1 5 MM MM 1017.9 -0.5 MM -6.9 MM -1.2 MM MM MM MM MM MM MM MM MM MM MM MM MM MM MM

46633 2007 03 05 21 44.3 -131.3 MM MM MM MM MM MM MM 1010.5 MM 9.8 MM MM -1.1 MM MM MM MM MM MM MM MM MM MM MM MM MM MM MM

Feedback - Please take this Customer Survey to let us know what you think of these changes.

Station Identifier: How are the station ID numbers created?

The World Meteorological Organization (WMO) assigns a 5-character alpha-numeric station identifier to all weather observation stations, including moored buoys, drifting buoys, and C-Man. Generally, these IDs are location specific, except for drifting buoys which retain their identifier assigned by deployment location. Before 1977, however, the moored buoy IDs were of the following form: EB-## (e.g., EB-4, EB-12), which bore no relation to its location. In the data inventory summary: Data Availability Summary for NDBC Platforms, EB IDs are listed under the appropriate station ID, if applicable.

The WMO station identification system is very simple. Identifiers are in the form of “&&###” where “&&” represents a WMO oceanic or continental region and ### denotes a specific location (e.g., 46042, 41003). With respect to regions, 32 denotes stations in the Pacific off the coast of South America, 41 – the Atlantic off of the southeast U.S. coast, 44 – the Atlantic Ocean north of North Carolina, 42 – the Gulf of Mexico, 45 – the Great Lakes, 46 – the U.S. coastal Pacific Ocean, 51 – the Hawaiian Islands, 52 – Guam.

Station identifiers for C-MAN sites in the U.S. are determined through a national system. It is alphanumeric with the format: AAAS#. “S#” is the first alphabetic letter for the state where the C-MAN site is located followed by the number of its location in alphabetized order of that state in ascending sequence (L1 – Louisiana, N6 – New York, N7 – North Carolina). “AAA” is composed of alphabetic letters and is an abbreviation of the location. As an example, Grand Isle, LA is represented by GDIL1, Lake Worth, FL – LKWF1, and Tatoosh Island, WA – TTIW1.

C-MAN stations that are a part of the former WESTPAC-AMOS program are identified using the WMO system, since WESTPAC data were transmitted internationally. WESTPAC stations were identified by 91###, where ### is the number assigned to the specific location.
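The naming rules above lend themselves to a small lookup. The sketch below is an illustration only: the region table contains just the codes listed in the text, the function name `describe_station` is made up, and identifiers that mix letters into the WMO form (such as 32D12 in the station list) are deliberately reported as unknown.

```python
import re

# WMO region prefixes exactly as listed in the text, plus the WESTPAC prefix.
WMO_REGIONS = {
    "32": "Pacific off the coast of South America",
    "41": "Atlantic off the southeast U.S. coast",
    "42": "Gulf of Mexico",
    "44": "Atlantic Ocean north of North Carolina",
    "45": "Great Lakes",
    "46": "U.S. coastal Pacific Ocean",
    "51": "Hawaiian Islands",
    "52": "Guam",
    "91": "WESTPAC (former WESTPAC-AMOS program)",
}


def describe_station(station_id):
    """Classify an ID as WMO-style (&&###) or C-MAN-style (AAAS#)."""
    if re.fullmatch(r"\d{5}", station_id):
        region = WMO_REGIONS.get(station_id[:2], "region not listed in these notes")
        return ("WMO", region)
    match = re.fullmatch(r"([A-Z]{3})([A-Z]\d)", station_id)
    if match:
        return ("C-MAN", f"location code {match.group(1)}, state code {match.group(2)}")
    return ("unknown", "")


for sid in ("46042", "41003", "GDIL1", "91204"):
    print(sid, describe_station(sid))
```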

data inventory summary: Data Availability Summary for NDBC Platforms

Below is a list of NDBC buoy and C-MAN stations. Click on the link for a station to see a summary of the types of data available for that station.

ATLANTIC

- EB01

- EB91 (EB52)

- 41001 - EAST HATTERAS - 150 NM East of Cape Hatteras

- 41002 - SOUTH HATTERAS - 225 NM South of Cape Hatteras

- 41003

- 41004 - EDISTO - 41 NM Southeast of Charleston, SC

- 41005

- 41006

- 41007

- 41008 - GRAYS REEF - 40 NM Southeast of Savannah, GA

- 41009 - CANAVERAL 20 NM East of Cape Canaveral, FL

- 41010 - CANAVERAL EAST - 120NM East of Cape Canaveral

- 41011

- 41012 - St. Augustine, FL 40NM ENE of St Augustine, FL

- 41015

- 41016

- 41017

- 41018

- 41021 - Olympic Northeast

- 41022 - Olympic Southwest

- 41023 - Olympic Southwest

- 41025 - Diamond Shoals, NC

- 44001

- 44003

- 44004 - HOTEL 200NM East of Cape May,NJ

- 44005 - GULF OF MAINE - 78 NM East of Portsmouth, NH

- 44006

- 44007 - PORTLAND - 12 NM Southeast of Portland,ME

- 44008 - NANTUCKET 54 NM Southeast of Nantucket

- 44009 - DELAWARE BAY 26 NM Southeast of Cape May, NJ

- 44010

- 44011 - GEORGES BANK 170 NM East of Hyannis, MA

- 44012

- 44013 - BOSTON 16 NM East of Boston, MA

- 44014 - VIRGINIA BEACH 64 NM East of Virginia Beach, VA

- 44015

- 44017 - MONTAUK POINT - 23 NM SSW of Montauk Point, NY

- 44018 - CAPE COD - 9 NM North of Provincetown, MA

- 44019

- 44023

- 44025 - LONG ISLAND - 30 NM South of Islip, NY

- 44026

- 44028 - Buzzard's Bay

GREAT LAKES

- 45001 - MID SUPERIOR- 60 NM North Northeast Hancock, MI

- 45002 - NORTH MICHIGAN- Halfway between North Manitou and Washington Islands.

- 45003 - NORTH HURON - 32NM Northeast of Alpena, MI

- 45004 - EAST SUPERIOR -70 NM NE Marquette, MI

- 45005 - WEST ERIE - 16 NM NW of Lorain, OH

- 45006 - WEST SUPERIOR - 30NM NE of Outer Island, WI

- 45007 - SOUTH MICHIGAN - 43NM East Southeast of Milwaukee, WI

- 45008 - SOUTH HURON - 43NM East of Oscoda, MI

- 45009

- 45010

- 45012 - EAST Lake Ontario - 20NM North Northeast of Rochester, NY

GULF OF MEXICO

- EB10

- EB31

- EB32

- EB36

- EB52

- EB53

- EB61

- EB62

- EB92 (EB61)

- 42001 - MID GULF - 180 nm South of Southwest Pass, LA

- 42002 - WEST GULF - 207 NM East of Brownsville, TX

- 42003 - East GULF - 208 NM West of Naples, FL

- 42004

- 42005

- 42006

- 42007 - BILOXI - 22 NM SSE of Biloxi, MS

- 42008

- 42009

- 42010

- 42011

- 42012 - ORANGE BEACH - 44 NM SE of Mobile, AL

- 42015

- 42016

- 42017

- 42018

- 42019 - FREEPORT, TX - 60 NM South of Freeport, TX

- 42020 - CORPUS CHRISTI, TX - 60NM SSE of Corpus Christi, TX

- 42025

- 42035 - GALVESTON,TX - 22 NM East of Galveston, TX

- 42036 - WEST TAMPA - 112 NM WNW of Tampa, FL

- 42037

- 42039 - PENSACOLA - 115NM SSE of Pensacola, FL

- 42040 - LUKE OFFSHORE TEST PLATFORM - 63 NM South of Dauphin Island, AL

- 42041 - North Mid Gulf 110NM South of Grand Isle, LA

- 42042 - Pascagoula

- 42054 - East Gulf

NORTH PACIFIC AND GULF OF ALASKA

- EB33

- EB35

- EB43

- EB70

- EB90 (EB02)

- 46001 - WESTERN GULF OF ALASKA - 175NM SE of Kodiak, AK

- 46002 - WEST OREGON - 275NM West of Coos Bay, OR

- 46003 - S ALEUTIANS

- 46004 - Middle Nomad

- 46005 - WEST WASHINGTON - 300NM West of Aberdeen, WA

- 46006 - SOUTHEAST PAPA - 600NM West of Eureka, CA

- 46007

- 46008

- 46009

- 46010

- 46011 - SANTA MARIA - 21NM NW of Point Arguello, CA

- 46012 - HALF MOON BAY - 24NM SSW of San Francisco, CA

- 46013 - BODEGA BAY - 48NM NW of San Francisco, CA

- 46014 - PT ARENA - 19NM North of Point Arena, CA

- 46015 - PORT ORFORD - 15 NM West of Port Orford, OR

- 46016

- 46017

- 46018

- 46019

- 46020

- 46021

- 46022 - EEL RIVER - 17NM WSW of Eureka, CA

- 46023 - PT ARGUELLO - 17NM WNW of Point Arguello, CA

- 46024

- 46025 - Santa Monica Basin - 33NM WSW of Santa Monica, CA

- 46026 - SAN FRANCISCO - 18NM West of San Francisco, CA

- 46027 - ST GEORGES - 8 NM NW of Crescent City, CA

- 46028 - CAPE SAN MARTIN - 55NM West NW of Morro Bay, CA

- 46029 - COLUMBIA RIVER BAR - 20NM West of Columbia River Mouth

- 46030 - BLUNTS REEF

- 46031

- 46032

- 46033

- 46034

- 46035 - CENTRAL BERING SEA - 310 NM North of Adak, AK

- 46036 - South Nomad

- 46037

- 46038

- 46039

- 46040

- 46041 - CAPE ELIZABETH - 45NM NW of Aberdeen, WA

- 46042 - MONTEREY - 27NM WNW of Monterey, CA

- 46043

- 46045 - REDONDO BEACH

- 46047 - TANNER BANK - 121 NM West of San Diego, CA

- 46048

- 46050 - STONEWALL BANK - 20NM West of Newport, OR

- 46051 - Harvest Experiment Platform

- 46053 - EAST SANTA BARBARA - 12NM Southwest of Santa Barbara, CA

- 46054 - WEST SANTA BARBARA 38 NM West of Santa Barbara, CA

- 46059 - WEST CALIFORNIA - 357NM West of San Francisco, CA

- 46060 - WEST ORCA BAY - 8NM NW of Hinchinbrook Is., AK

- 46061 - Seal Rocks - Between Montague and Hinchinbrook Islands, AK

- 46062 - Pt. San Luis, CA - 18 NM South Southwest of Morro Bay, CA

- 46063 - Pt.Conception, CA - 50NM West of Santa Barbara, CA

- 46066 - SOUTH KODIAK - 310NM SSW of Kodiak, AK

- 46072 - CENTRAL ALEUTIANS 230 NM SW Dutch Harbor

- 46079 - Barren Island

- 46080 - PORTLOCK BANK- 76 NM ENE of Kodiak, AK

- 46082 - Cape Suckling - 35 NM SE of Kayak Is, AK

- 46083 - FAIRWEATHER GROUND - 105 NM West of Juneau, AK

- 46084 - CAPE EDGECUMBE - 25NM SSW of Cape Edgecumbe, AK

HAWAIIAN ISLANDS

- 51001 - NORTHWESTERN HAWAII ONE - 188 NM NW of Kauai Island, HI

- 51002 - SOUTHWEST HAWAII - 215NM SSW of Hilo, HI

- 51003 - WESTERN HAWAII - 205 NM SW of Honolulu, HI

- 51004 - SOUTHEAST HAWAII - 205 NM Southeast of Hilo, HI

- 51005

- 51026 - Northern Molokai

- 51027

- 51028 - Christmas Island

WESTERN PACIFIC

SOUTH PACIFIC

COASTAL-MARINE AUTOMATED NETWORK (C-MAN) C-MAN FIXED STATIONS

- ABAN6 - Alexandria Bay, NY

- ALRF1 - Alligator Reef

- ALSN6 - Ambrose Light, NY

- AUGA2 - Augustine Island, AK

- BLIA2 - Bligh Reef Light, AK

- BURL1 - Southwest Pass, LA

- BUSL1 - Bullwinkle Block 65

- BUZM3 - Buzzards Bay, MA

- CARO3 - Cape Arago, OR

- CDRF1 - Cedar Key, FL

- CHLV2 - Chesapeake Light, VA

- CLKN7 - Cape Lookout, NC

- CSBF1 - Cape San Blas, FL

- DBLN6 - Dunkirk, NY

- DESW1 - Destruction Island, WA

- DISW3 - Devils Island, WI

- DPIA1 - Dauphin Island, AL

- DRFA2 - Drift River Terminal, AK

- DRYF1 - Dry Tortugas, FL

- DSLN7 - Diamond Shls Lt., NC

- DUCN7 - Duck Pier, NC

- FBIS1 - Folly Island, SC

- FFIA2 - Five Fingers, AK

- FPSN7 - Frying Pan Shoals, NC

- FWYF1 - Fowey Rock, FL

- GBCL1 - Garden Banks

- GDIL1 - Grand Isle, LA

- GLLN6 - Galloo Island, NY

- IOSN3 - Isle of Shoals, NH

- KTNF1 - Keaton Beach, FL

- LKWF1 - 8722670 - Lake Worth Pier, FL

- LNEL1 - Lena

- LONF1 - Long Key, FL

- LSCM4 - Lake St. Clair Light, MI

- MDRM1 - Mt. Desert Rock, ME

- MISM1 - Matinicus Rock, ME

- MLRF1 - Molasses Reef, FL

- MPCL1 - Main Pass

- MRKA2 - Middle Rock Light, AK

- NWPO3 - Newport, OR

- PILA2 - Pilot Rock, AK

- PILM4 - Passage Island, MI

- POTA2 - Potato Point, AK

- PTAC1 - Point Arena, CA

- PTAT2 - Port Aransas, TX

- PTGC1 - Point Arguello, CA

- ROAM4 - Rock of Ages, MI

- SANF1 - Sand Key, FL

- SAUF1 - St. Augustine, FL

- SBIO1 - South Bass Island, OH

- SGNW3 - Sheboygan, WI

- SISW1 - Smith Island, WA

- SJLF1 - St. John's Light

- SMKF1 - Sombrero Key, FL

- SPGF1 - Settlement Point, GBI, Bahamas

- SRST2 - Sabine Pass, TX

- STDM4 - Stannard Rock, MI

- SUPN6 - Superior Shoals, NY

- SVLS1 - Savannah Light, GA

- THIN6 - Thousand I. Brdg., NY

- TPLM2 - Thomas Point, MD

- TTIW1 - Tatoosh Island, WA

- VENF1 - Venice, FL

- WPOW1 - West Point, WA

C-MAN WESTPAC STATIONS

- 91204 - Ulithi Is., Caroline

- 91222 - Pagan Is.,Mariana

- 91251 - Enewetak, MarShall

- 91328 - Ulul Atoll, Caroline

- 91338 - Satawan Atoll, Caro.

- 91343 - Oroluk, Caroline

- 91352 - Pingelap, Caroline

- 91356 - Kosrae, Caroline

- 91365 - Ujae Atoll, Marshall

- 91374 - Maloelap, Marshall

- 91377 - Mili, Marshalls Is.

- 91411 - Ngulu Atoll, Caroline

- 91442 - Ebon Atoll, Caroline

- FARP2 - Faraulep, Carolines Island

Tsunameters

- 21413 - SOUTHEAST TOKYO - 700NM ESE of Tokyo, JP

- 21414 - AMCHITKA - 170 NM South of Amchitka, AK

- 21415 - ATTU - 175 NM South of Attu, AK

- 21416 - KAMCHATKA PENINSULA - 240NM SE of Kamchatka Peninsula, RU

- 21417 - 290NM SE of the Kuril Islands

- 21418 - NORTHEAST TOKYO - 450 NM NE of Tokyo, JP

- 21419 - KURIL ISLANDS - 209NM SE of Kuril Is.

- 21420 - SOUTHEAST MIYAZAKI - 260NM Southeast of Miyazaki

- 32411 - WEST PANAMA - 710 NM WSW of Panama City, Panama

- 32412 - SOUTHWEST LIMA - 645 NM SW of Lima, Peru

- 32413 - NORTHWEST LIMA - 1000 NM WNW of Lima, Peru

- 32D12 - SOUTHWEST LIMA 645NM SW of Lima, Peru

- 32D13 - ADRIFT BUOY FROM 32413

- 41420 - NORTH SANTO DOMINGO - 328NM NNE of Santo Domingo, DO

- 41421 - NORTH ST THOMAS - 300 NM North of St Thomas, Virgin Is

- 41424 - EAST CHARLESTON - 370 NM East of Charleston, SC

- 41425 - SOUTHWEST BERMUDA - 200 NM SSW of Hamilton, Bermuda

- 41X24 - 370 NM East of Charleston, SC

- 42407 - SOUTH PUERTO RICO - 230 NM Southwest of San Juan, PR

- 42408 - EAST GULF of MEXICO - 225NM WNW of Loggerhead Key, FL

- 42409 - GULF OF MEXICO - 247 NM South of New Orleans, LA

- 42429 - DART WAVE GLIDER STATION

- 43412 - SOUTHWEST MANZANILLO - 240 NM SW of Manzanillo, MX

- 43413 - SOUTH ACAPULCO - 360NM South of Acapulco, MX

- 44401 - NORTHEAST CASTLE ROCK SEAMOUNT - 620NM South of St John's Newfoundland, CN

- 44402 - SOUTHEAST BLOCK CANYON - 130 NM SE of Fire Island, NY

- 44403 - SABLE ISLAND BANK - 437 NM E of Boston, MA

- 44D03 - Adrift buoy from 44403

- 46401 - SOUTHEAST ADAK - 377NM SE of Adak, AK

- 46402 - South Dutch Harbor - 220 NM SSE of Dutch Harbor, AK

- 46403 - SOUTHEAST SHUMAGIN ISLAND - 186 NM SE of Shumagin Is, AK

- 46404 - WEST ASTORIA - 230 NM West of Astoria, OR

- 46405 - 325 NM West-Southwest of Newport, OR

- 46406 - 2,900 NM Southeast of Hawaii

- 46407 - NEWPORT - 210NM West of Coos Bay, OR

- 46408 - NIKOLSKI - 212 NM South of Umnak Is, AK

- 46409 - SOUTHEAST KODIAK - 210 NM SE of Kodiak, AK

- 46410 - SOUTH CORDOVA - 188NM SSE of Cordova, AK

- 46411 - Mendocino - 150 NM West of Mendocino Bay, CA

- 46412 - San Diego - 175 NM West of San Diego, CA

- 46413 - SOUTH-SOUTHEAST ADAK - 243 NM SSE of Adak Island, AK

- 46414 - SOUTHEAST CHIRIKOF - 165 NM SE of Chirikof Island, AK

- 46415 - SOUTHWEST JUNEAU - 370 NM SW of Juneau, AK

- 46416 - WEST VANCOUVER - 442NM West of Vancouver, BC, Canada

- 46419 - NORTHWEST SEATTLE - 300 NM WNW of Seattle, WA

- 51406 - CENTRAL SOUTH PACIFIC - 1040NM NNE of Pitcairn Is

- 51407 - HAWAII - 34NM West of Kailua-Kona, HI

- 51425 - NORTHWEST APIA - 370 NM NW of Apia, Samoa

- 51426 - SOUTHEAST TONGA - 400NM SE of Tonga

- 51D26 - SOUTHEAST TONGA - 400NM SE of Tonga

- 52401 - NORTHEAST SAIPAN - 610 NM ENE of Saipan

- 52402 - SOUTHEAST SAIPAN - 540NM ESE of Saipan

- 52403 - NORTH MANUS - 345NM North of Manus Is., New Guinea

- 52404 - NORTH PHILIPPINE SEA - 750 NM NE of Manila, Philippines

- 52405 - SOUTH PHILIPPINE SEA - 725 NM West of Agana, Guam

- 52406 - NORTHEAST SOLOMON - 370NM NE of Guadalcanal

- 54401 - NORTHEAST NEW ZEALAND - 640NM NE of Auckland, NZ

- 54D01 - NORTHEAST NEW ZEALAND - 640NM NE of Auckland, NZ

Important Notice to Mariners: NATIONAL WEATHER SERVICE SEEKS COOPERATION TO SAFEGUARD CRITICAL DATA BUOYS

The National Weather Service is soliciting the cooperation of the marine community to safeguard offshore automated weather buoys that provide critical information, including wind speed and direction, wave height, pressure changes, and other key data about marine conditions and developing storms along the coast. The data buoys are an integral part of the comprehensive observation system that allows local forecast offices to issue weather warnings and forecasts for the protection of life and property.

Specific steps that mariners can take to safeguard the systems include:

- neither boarding nor tying-up to a data buoy;

- giving the buoy a wide berth to avoid entangling the buoy’s mooring or other equipment suspended from the buoy — 500 yards for vessels which are trailing gear, and at least 20 yards for all others;

- reporting to the U.S. Coast Guard any damage you observe to a data buoy;

- reporting to the U.S. Coast Guard any observation of people on or vessels attached to a weather buoy.

The NDBC operates a network of offshore automated weather buoys and Coastal-Marine Automated Network stations that provide hourly reports of marine weather to NWS and other agencies. The buoys, off the U.S. coasts and the Great Lakes, may be nearby or several hundred miles at sea. These stations provide hourly data to NWS forecast offices that are important to the preparation of forecasts and warnings. These data are also broadcast to the public over NOAA Weather Radio, and are posted on the Internet at the NDBC Website.

NDBC buoys have either circular or boat-shaped hulls ranging from three meters to 10 meters across, with superstructures extending five meters to 10 meters above the water. All are painted bright colors and imprinted with “NOAA” and the station number, show a yellow, group-flashing-4 (20 seconds) light characteristic, and are identified on applicable navigation charts by the five-digit station number, or as “ODAS.”

Standard Meteorological Data

#YY MM DD hh mm WDIR WSPD GST WVHT DPD APD MWD PRES ATMP WTMP DEWP VIS PTDY TIDE

#yr mo dy hr mn degT m/s m/s m sec sec degT hPa degC degC degC nmi hPa ft

2014 09 11 16 50 120 5.0 6.0 0.6 6 4.2 134 1016.5 29.3 30.5 24.4 MM +0.3 MM

| Field | Description |
|---|---|
| WDIR | Wind direction (the direction the wind is coming from in degrees clockwise from true N) during the same period used for WSPD. See Wind Averaging Methods. |
| WSPD | Wind speed (m/s) averaged over an eight-minute period for buoys and a two-minute period for land stations. Reported hourly. See Wind Averaging Methods. |
| GST | Peak 5 or 8 second gust speed (m/s) measured during the eight-minute or two-minute period. The 5 or 8 second period can be determined by payload. See the Sensor Reporting, Sampling, and Accuracy section. |
| WVHT | Significant wave height (meters) is calculated as the average of the highest one-third of all of the wave heights during the 20-minute sampling period. See the Wave Measurements section. |
| DPD | Dominant wave period (seconds) is the period with the maximum wave energy. See the Wave Measurements section. |
| APD | Average wave period (seconds) of all waves during the 20-minute period. See the Wave Measurements section. |
| MWD | The direction from which the waves at the dominant period (DPD) are coming. The units are degrees from true North, increasing clockwise, with North as 0 (zero) degrees and East as 90 degrees. See the Wave Measurements section. |
| PRES | Sea level pressure (hPa). For C-MAN sites and Great Lakes buoys, the recorded pressure is reduced to sea level using the method described in NWS Technical Procedures Bulletin 291 (11/14/80). (Labeled BAR in Historical files.) |
| ATMP | Air temperature (Celsius). For sensor heights on buoys, see Hull Descriptions. For sensor heights at C-MAN stations, see C-MAN Sensor Locations. |
| WTMP | Sea surface temperature (Celsius). For buoys the depth is referenced to the hull's waterline. For fixed platforms it varies with tide, but is referenced to, or near, Mean Lower Low Water (MLLW). |
| DEWP | Dewpoint temperature taken at the same height as the air temperature measurement. |
| VIS | Station visibility (nautical miles). Note that buoy stations are limited to reports from 0 to 1.6 nmi. |
| PTDY | Pressure Tendency is the direction (plus or minus) and the amount of pressure change (hPa) for a three-hour period ending at the time of observation. (Not in Historical files.) |
| TIDE | The water level in feet above or below Mean Lower Low Water (MLLW). |
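
The column layout above can be read with a few lines of Python. The following is a minimal sketch, not NDBC's own tooling: the function name `parse_stdmet` is hypothetical, and it assumes that the first `#` line names the columns, the second `#` line gives units, and `MM` in a data field marks a missing value (as in the sample row, where VIS and TIDE are `MM`).

```python
import math


def parse_stdmet(text):
    """Parse NDBC-style standard meteorological text into a list of dicts.

    Assumptions (not an official NDBC API): the first '#' line holds the
    column names, later '#' lines are unit rows, and 'MM' means missing.
    """
    columns = None
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("#"):
            # Only the first comment line carries the column names.
            if columns is None:
                columns = line.lstrip("#").split()
            continue
        if columns is None:
            raise ValueError("data row found before the column header")
        values = line.split()
        # Missing values become NaN so later arithmetic does not crash.
        record = {
            name: (math.nan if raw == "MM" else float(raw))
            for name, raw in zip(columns, values)
        }
        records.append(record)
    return records


sample = """\
#YY MM DD hh mm WDIR WSPD GST WVHT DPD APD MWD PRES ATMP WTMP DEWP VIS PTDY TIDE
#yr mo dy hr mn degT m/s m/s m sec sec degT hPa degC degC degC nmi hPa ft
2014 09 11 16 50 120 5.0 6.0 0.6 6 4.2 134 1016.5 29.3 30.5 24.4 MM +0.3 MM
"""

rows = parse_stdmet(sample)
print(rows[0]["WDIR"], rows[0]["WSPD"], rows[0]["WVHT"], rows[0]["VIS"])
```

Returning NaN rather than dropping the row keeps the time axis intact, which is convenient when the buoy record is later compared with model output at fixed times.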

Derived Met Values

#YY MM DD hh mm CHILL HEAT ICE WSPD10 WSPD20

#yr mo dy hr mn degC degC cm/hr m/s m/s

2014 09 11 16 50 MM 34.4 MM 5 5

| Field | Description |
|---|---|
| HEAT | For more information on heat index, please see the NWS Heat Wave page. |
| CHILL | Please note that NDBC uses unadjusted winds to calculate wind chill. The winds are calculated at anemometer height. For more information on wind chill, please see the NWS Wind Chill Temperature Index. |
| ICE | Estimated ice accretion in inches per hour based on an algorithm developed by Overland and Pease at the Pacific Marine Environmental Laboratory in the mid-1980s. The algorithm relates icing to the presently observed wind speed, air temperature, and sea surface temperature. The method is designed for trawlers in the 20 to 75 meter length range, underway at normal speeds in open seas and not heading downwind. In general, NWS forecasters translate ice accretion rates to the following categories: light: 0.0 to 0.24 inches of ice accretion/hour; moderate: 0.25 to 0.8 inches/hour; and heavy: greater than 0.8 inches/hour. |
| WSPD10 | The estimation of Wind Speed (WSPD) measurement raised or lowered to a height of 10 meters. NDBC uses the method of Liu et al., 1979: Bulk parameterization of air-sea exchanges in heat and water vapor including molecular constraints at the interface, Journal of Atmospheric Science, 36, pp. 1722-1735. |
| WSPD20 | The estimation of Wind Speed (WSPD) measurement raised or lowered to a height of 20 meters. NDBC uses the method of Liu et al., 1979: Bulk parameterization of air-sea exchanges in heat and water vapor including molecular constraints at the interface, Journal of Atmospheric Science, 36, pp. 1722-1735. |
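
When only WSPD at anemometer height is available, a rough height adjustment can be sketched with a simple power-law wind profile. Note that this is not the Liu et al. (1979) bulk method that NDBC uses for WSPD10 and WSPD20. It is a simpler stand-in, and the exponent `alpha = 0.11` (a value commonly quoted for near-neutral conditions over open water) and the 5 m anemometer height in the example are assumptions made here for illustration only.

```python
def adjust_wind_speed(speed, z_measured, z_target=10.0, alpha=0.11):
    """Scale a wind speed from z_measured (m) to z_target (m).

    Uses the power-law profile u(z2) = u(z1) * (z2 / z1) ** alpha.
    This is a simplified substitute for the Liu et al. (1979) method,
    and alpha = 0.11 is an assumed near-neutral open-ocean exponent.
    """
    if z_measured <= 0 or z_target <= 0:
        raise ValueError("heights must be positive")
    return speed * (z_target / z_measured) ** alpha


# Hypothetical case: WSPD = 5.0 m/s measured by an anemometer at 5 m.
wspd10_estimate = adjust_wind_speed(5.0, z_measured=5.0, z_target=10.0)
wspd20_estimate = adjust_wind_speed(5.0, z_measured=5.0, z_target=20.0)
print(round(wspd10_estimate, 2), round(wspd20_estimate, 2))
```

The power law ignores atmospheric stability, so its result can differ from the WSPD10 value reported in the derived files, especially when air and sea temperatures differ strongly.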

Supplemental Measurements Data

#YY MM DD hh mm PRES PTIME WSPD WDIR WTIME

#yr mo dy hr mn hPa hhmm m/s degT hhmm

2014 09 11 16 50 MM MM 6 110 1603

| Measurement | Description |
|---|---|
| Lowest 1 minute pressure | Lowest recorded atmospheric pressure for the hour to the nearest 0.1 hPa and the time at which it occurred (hour and minute). |
| Highest 1 minute wind speed | Highest recorded wind speed for the hour to the nearest 0.1 m/s, its corresponding direction to the nearest degree, and the time at which it occurred (hour and minute). |

Continuous Winds

#YY MM DD hh mm WDIR WSPD GDR GST GTIME

#yr mo dy hr mn degT m/s degT m/s hhmm

2014 09 11 16 50 117 5.2 120 6.0 1644

| Field | Description |
|---|---|
| WDIR | Ten-minute average wind direction measurements in degrees clockwise from true North. (DIR in Historical files) |
| WSPD | Ten-minute average wind speed values in m/s. (SPD in Historical files) |
| GDR | Direction, in degrees clockwise from true North, of the GST, reported at the last hourly 10-minute segment. |
| GST | Maximum 5-second peak gust during the measurement hour, reported at the last hourly 10-minute segment. |
| GTIME | The minute of the hour that the GST occurred, reported at the last hourly 10-minute segment. |

For more information on continuous winds and the timing of these measurements, see the continuous winds help section.
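
Buoy winds are reported as a speed and a "coming from" direction, whereas gridded vector wind products are usually stored as eastward (u) and northward (v) components. The conversion below is a minimal sketch of the standard meteorological convention. The function name `wind_to_uv` is hypothetical, and the example values are the WDIR and WSPD of the sample row above.

```python
import math


def wind_to_uv(speed, direction_from_deg):
    """Convert speed and 'coming from' direction to (u, v) components.

    direction_from_deg is measured clockwise from true North, as in WDIR.
    The wind blows toward the opposite direction, hence the minus signs.
    u is positive eastward, v is positive northward.
    """
    theta = math.radians(direction_from_deg)
    u = -speed * math.sin(theta)
    v = -speed * math.cos(theta)
    return u, v


# Sample continuous-winds row: WDIR = 117 degT, WSPD = 5.2 m/s.
u, v = wind_to_uv(5.2, 117.0)
print(round(u, 2), round(v, 2))
```

A wind from the east-southeast therefore has a negative u (blowing westward) and a positive v (blowing northward), which is a quick sign check when comparing against gridded fields.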

Detailed Wave Summary (Realtime data files only)

#YY MM DD hh mm WVHT SwH SwP WWH WWP SwD WWD STEEPNESS APD MWD

#yr mo dy hr mn m m sec m sec - degT - sec degT

2014 09 11 17 00 0.6 0.4 5.6 0.4 4.3 SE MM N/A 4.2 134

| WVHT | Significant Wave Height is the average height (meters) of the highest one-third of the waves during a 20-minute sampling period. |

|---|---|

| SwH | Swell height is the vertical distance (meters) between any swell crest and the succeeding swell wave trough. |

| SwP | Swell Period is the time (usually measured in seconds) that it takes successive swell wave crests or troughs to pass a fixed point. |

| WWH | Wind Wave Height is the vertical distance (meters) between any wind wave crest and the succeeding wind wave trough (independent of swell waves). |